I’ve spent 24 hours with Apple Intelligence and while there’s lots still missing, this first beta makes me very excited for the iPhone’s AI-fuelled future

24 hours with Apple Intelligence.

When Craig Federighi revealed Apple Intelligence back in June, the AI-sceptic in me was incredibly excited by Apple’s first major step towards an AI-fuelled future. The company is known for not doing things first but doing things right, and a first look at AI features built with the end user in mind piqued my interest.

Earlier this week, news began to circulate that Apple Intelligence won’t be ready to launch alongside the iPhone 16 in September, along with rumors of a release date for the first developer beta to include it. Now, nearly two months after that WWDC reveal, I’ve finally got my hands on a version of Apple Intelligence, albeit a very early one, and I can’t wait to see what the future of this software holds.

My first experience with Apple Intelligence

I’m based outside the US, so the first thing I had to do to even gain access to Apple Intelligence was change my iPhone 15 Pro Max’s language and region. After that, I restarted my device, made a cup of tea, and joined the Apple Intelligence waitlist ready for a long wait before accessing the new AI powers.

Merely a minute later, with an iPhone as hot to the touch as my freshly brewed chamomile tea, I was in: Apple Intelligence had entered my world. Now, before I give my thoughts on the AI features I’ve tried over the last 24 hours, I want to preface this by saying that this incredibly early build of Apple Intelligence is missing quite a lot of what people are excited to try. In fact, my colleague James has written about everything missing in the Apple Intelligence beta. Instead, I’m going to focus on the handful of AI features that do work on the best iPhones right now.

Summarize this. Rewrite that.

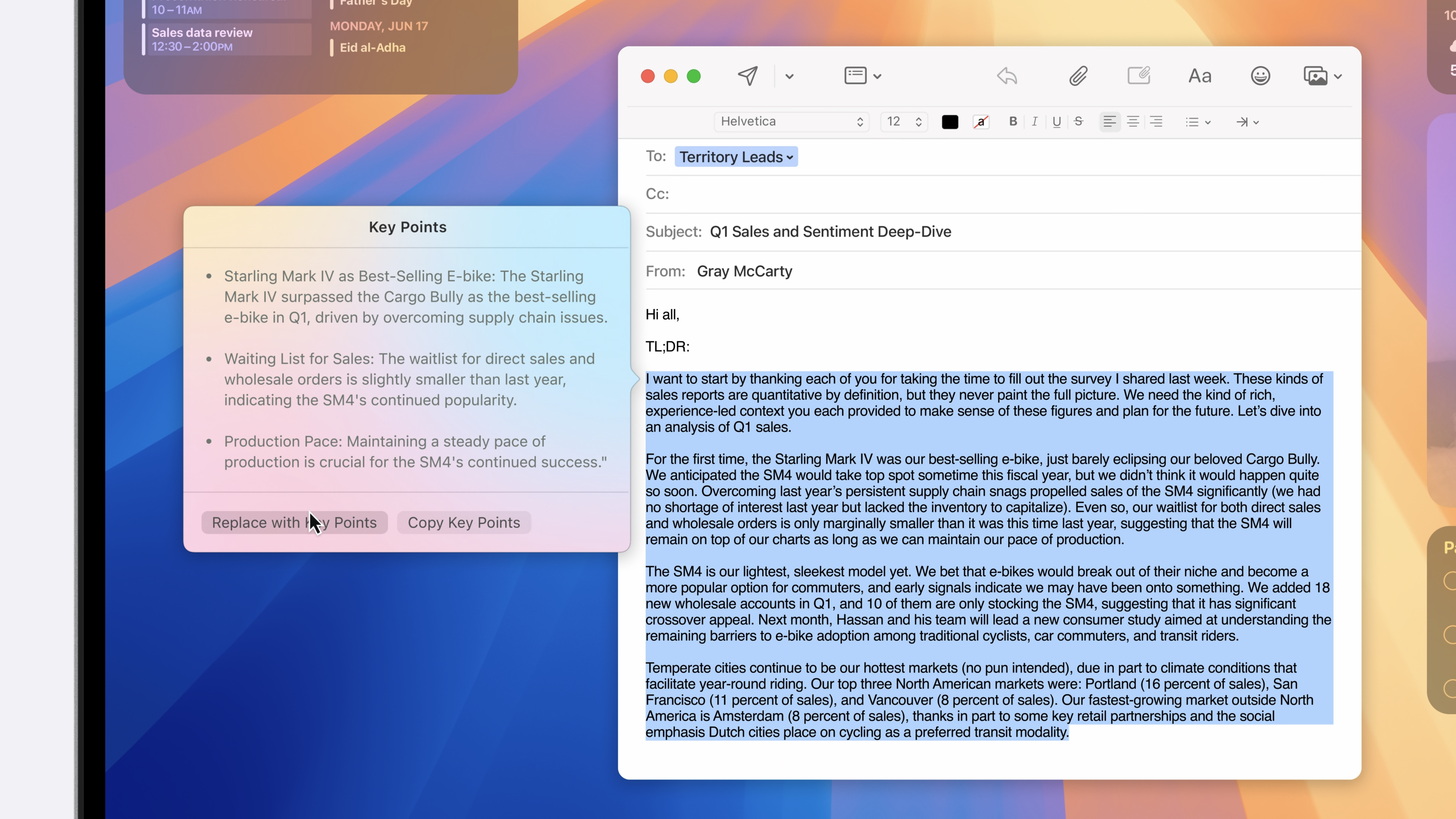

Apple Intelligence’s summary tool is pretty damn cool. It’s completely built into iOS 18.1 and can be used at any point simply by selecting text. The tool functions differently depending on the app. In Safari, for example, if I find an article and open Reader, I can then summarize the text to make it easier to digest. In Mail, on the other hand, every email has a summarize button at the top that quickly gives me the key information from the message.

None of this is new to those heavily invested in AI already, but having it directly embedded into iOS, iPadOS, and macOS is going to prove very useful. Obviously, there’s an ethical debate here, and I’m not really a fan of summarizing content myself, but using the tool in the Mail app could become one of the best Apple Intelligence features, especially for quickly skimming key information.

Alongside the ability to summarize are the new proofreading and rewriting options. Time will tell whether this creates a weird utopia where every iPhone user communicates in the exact same tone of voice, but as it stands, tools like Grammarly should be quaking in their boots. With just a press of a button, Apple Intelligence reads what you’ve written and makes sure it’s grammatically correct, which is ideal for quickly working on the go. The rewrite feature gives you different tones of voice to choose from, and while it’s going to prove useful for many, I find it deeply concerning. As a test, I went onto iMore and copied text from one of my articles to see if Google could pick up on its origin. Pasting the original text into Google brings up the article in question, but running the text through Apple Intelligence’s rewrite feature before searching doesn’t return anything from iMore’s domain. I know that, like the summary tool, this isn’t anything new, but the fact that millions of iPhone users will gain access to these tools embedded in iOS 18.1 makes me worry about the future.

A better Siri, but still not the one we want

Siri’s had a complete redesign in iOS 18.1, with the voice assistant now represented by a gorgeous full-screen animation that beams around the edges of your device. You can also activate “Type to Siri” by double tapping the bottom of your iPhone’s display, perfect for times when you want to discreetly ask something in public. Siri is going to be at the core of the Apple Intelligence experience, but we’ll need to wait until 2025 to get access to Siri 2.0. The updated Siri will have awareness of personal context and the applications on your display, so you can use it to get the most out of Apple Intelligence. In the WWDC keynote, Apple used an example of the AI capabilities pulling information from a poster of an event to determine whether or not someone could move their meeting and still make their daughter’s theatre performance. Apple Intelligence was able to work out who the person’s daughter was, identify the event in question, and on top of all that, contact those involved and create new calendar events. Seriously impressive stuff. In this beta, however, Siri has none of those capabilities. While it can answer more prompts than before, often opting to provide an answer rather than sending you a list of Google links, it’s still not smart enough to let you feel the true power of AI.

I love the new Siri animation and the flexibility of options on how to activate it, but Apple Intelligence as a whole feels a bit disjointed without the major update to everyone’s favorite (yeah, right) voice assistant.

A lack of cohesion, but that will change

Alongside the features I’ve mentioned above, Apple Intelligence can also make Movie Memories in the current iOS 18.1 developer beta, although for whatever reason it won’t work on my device. There’s also a neat feature that lets you transcribe phone calls to Notes and, from my brief testing, it works very well.

That said, as it stands, Apple Intelligence feels like a set of small enhancements to iOS that are nowhere near their potential. That’s obviously to be expected, as iOS 18.1 isn’t due for release until October, but it does raise some questions about the future of Apple’s AI tools. My main concern is the iPhone 16 at launch, especially if Apple Intelligence’s arrival in October won’t bring with it the next generation of Siri, which is core to the experience. The features on offer today, combined with headline features like Genmoji and Image Playground, will be a welcome addition to the iPhone experience, but without the personal assistant aspect of Siri 2.0, I fear that we’ll quickly forget these tools are even baked in.

John-Anthony Disotto is the How To Editor of iMore, ensuring you can get the most from your Apple products and helping fix things when your technology isn’t behaving itself. Living in Scotland, where he worked for Apple as a technician focused on iOS and iPhone repairs at the Genius Bar, John-Anthony has used the Apple ecosystem for over a decade and prides himself in his ability to complete his Apple Watch activity rings. John-Anthony has previously worked in editorial for collectable TCG websites and graduated from The University of Strathclyde where he won the Scottish Student Journalism Award for Website of the Year as Editor-in-Chief of his university paper. He is also an avid film geek, having previously written film reviews and received the Edinburgh International Film Festival Student Critics award in 2019. John-Anthony also loves to tinker with other non-Apple technology and enjoys playing around with game emulation and Linux on his Steam Deck.

In his spare time, John-Anthony can be found watching any sport under the sun from football to darts, taking the term “Lego house” far too literally as he runs out of space to display any more plastic bricks, or chilling on the couch with his French Bulldog, Kermit.