FAQ: Apple Child Safety — what is Apple doing and should people be concerned?

Apple has announced a series of new Child Safety measures that will debut on some of its platforms later this year. The details of these measures are highly technical, and cover the extremely sensitive topics of child exploitation, child sexual abuse material (CSAM), and grooming. The new measures from Apple are designed "to help protect children from predators who use communication tools to recruit and exploit them, and limit the spread of Child Sexual Abuse Material." Yet their scope and implementation have raised the ire of some security experts and privacy advocates, and have caused discomfort among regular users of Apple devices like the iPhone and iPad, and of services like iCloud and Messages. So what is Apple doing, and what are some of the questions and concerns people have?

Apple's plans were announced very recently, and the company has continued to clarify details since. It is possible that some of the content on this page may change or be added to as more details come to light.

The Goal

As noted, Apple wants to protect children on its platforms from grooming and exploitation, and to prevent the spread of CSAM (child sexual abuse material). To that end, it has announced three new measures:

- Communication safety in Messages

- CSAM detection

- Expanding guidance in Siri and Search

The final one is the least intrusive and least controversial, so let's begin there.

Expanding guidance in Siri and Search

Apple is adding new guidance to Siri and Search, not only to help shield children and parents from unsafe situations online but also to try to prevent people from deliberately searching for harmful content. From Apple:

Apple is also expanding guidance in Siri and Search by providing additional resources to help children and parents stay safe online and get help with unsafe situations. For example, users who ask Siri how they can report CSAM or child exploitation will be pointed to resources for where and how to file a report. Siri and Search are also being updated to intervene when users perform searches for queries related to CSAM. These interventions will explain to users that interest in this topic is harmful and problematic, and provide resources from partners to get help with this issue.

As noted, this is the least controversial move and isn't too different from, say, a mobile carrier offering parental guidance on searches for under-18s. It is also the most straightforward: Siri and Search will try to shield young people from potential harm, help people report CSAM or child exploitation, and actively try to stop those who might seek out CSAM images while offering resources for support. There are also no real privacy or security concerns with this.

Communication safety in Messages

This is the second-most controversial and second-most complicated measure. From Apple:

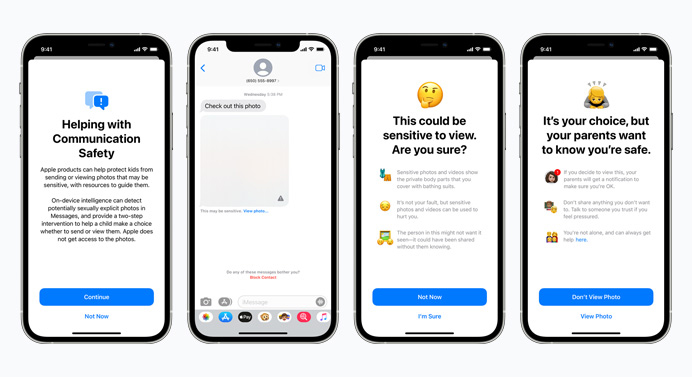

The Messages app will add new tools to warn children and their parents when receiving or sending sexually explicit photos

Apple says that Messages will blur out sexually explicit photos identified using machine learning, warning the child, presenting them with helpful resources, and telling them that it is okay for them not to view the photo. If parents of children under 13 choose, they can also opt in to receive notifications if their child views a potentially sexually explicit message.

Who does this apply to?

The new measures are only available to children who are members of a shared iCloud family account. The new system does not work for anyone aged 18 or older, so it can't prevent or detect unsolicited images sent between two co-workers, for instance, because the recipient must be a child.

Under-18s on Apple's platforms are divided further still. Children aged 13 to 17 can still receive the content warnings, but their parents won't have the option to receive notifications. For children under 13, both the content warnings and parental notifications are available.

How can I opt out of this?

You don't have to. Parents of children to whom this might apply must opt in to use the feature, which won't be turned on automatically when you update to iOS 15. If you don't want your children to have access to this feature, you don't need to do anything: it's opt-in, not opt-out.
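To make these eligibility rules concrete, here is a minimal Python sketch of the decision logic as this article describes it: nothing is on unless a parent opts in, only children in a shared iCloud family account are covered, 13-to-17-year-olds get content warnings only, and parental notifications are an extra option for under-13s. The `ChildAccount` type, its field names, and the feature labels are hypothetical; this is not Apple's code or API.

```python
from dataclasses import dataclass

@dataclass
class ChildAccount:
    age: int
    in_family_group: bool      # member of a shared iCloud family account
    parent_opted_in: bool      # the feature is off until a parent turns it on
    parent_wants_alerts: bool  # only honored for children under 13

def messages_safety_features(account: ChildAccount) -> set[str]:
    """Return the communication safety features that apply (hypothetical model)."""
    # Nothing applies to adults, to accounts outside a family group,
    # or until a parent has opted in.
    if account.age >= 18 or not account.in_family_group or not account.parent_opted_in:
        return set()
    features = {"blur_and_warn"}  # ages 13-17 stop here: content warnings only
    if account.age < 13 and account.parent_wants_alerts:
        features.add("notify_parents")  # extra option for under-13s
    return features

print(sorted(messages_safety_features(ChildAccount(11, True, True, True))))
print(sorted(messages_safety_features(ChildAccount(15, True, True, True))))
```

Modeling opt-in as a flag that defaults nothing on mirrors the article's point that doing nothing leaves the feature off.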

Who knows about the alerts?

From John Gruber:

If a child sends or receives (and chooses to view) an image that triggers a warning, the notification is sent from the child's device to the parents' devices — Apple itself is not notified, nor is law enforcement.

Doesn't this compromise iMessage's end-to-end encryption?

First, Apple's new feature applies to the Messages app, not just iMessage, so it also covers messages sent via SMS, says John Gruber. Second, the detection takes place at either "end" of the end-to-end (E2E) encryption: on the sender's device before a message is encrypted and sent, and on the recipient's device after it has been received and decrypted, so iMessage's E2E encryption stays intact. The analysis is also done by on-device machine learning, so Apple can't see the contents of the message. Most people would argue that E2E encryption means only the sender and the recipient of a message can view its contents, and that isn't changing.
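The ordering is the key point. In this simplified Python sketch, the check runs on the plaintext on the sender's device before encryption, and again on the recipient's device after decryption, so anything relaying the message in between only ever handles ciphertext. The `looks_explicit` function is a hypothetical placeholder for Apple's machine-learning classifier, and the one-time pad (XOR with a random key as long as the message) is only a stand-in for iMessage's actual encryption, which works differently.

```python
import secrets

def looks_explicit(image: bytes) -> bool:
    # Hypothetical placeholder for the on-device machine-learning classifier.
    return image.startswith(b"EXPLICIT")

def xor_bytes(data: bytes, key: bytes) -> bytes:
    # One-time pad: the key must be as long as the data and never reused.
    return bytes(a ^ b for a, b in zip(data, key))

def send(image: bytes, shared_key: bytes) -> tuple[bytes, bool]:
    # Sender's "end": classify the plaintext locally, then encrypt.
    warn_sender = looks_explicit(image)          # stays with the local app
    ciphertext = xor_bytes(image, shared_key)    # only this is transmitted
    return ciphertext, warn_sender

def receive(ciphertext: bytes, shared_key: bytes) -> tuple[bytes, bool]:
    # Recipient's "end": decrypt locally, then classify.
    image = xor_bytes(ciphertext, shared_key)
    return image, looks_explicit(image)

photo = b"EXPLICIT-example-pixels"
key = secrets.token_bytes(len(photo))
wire, sender_warning = send(photo, key)
received, recipient_warning = receive(wire, key)
print(sender_warning, recipient_warning, received == photo)
```

Whatever sits between `send` and `receive` sees only `wire`; neither the plaintext nor the classifier's verdict has to leave either device.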

Can Apple read my kid's messages?

The new Messages tool will use on-device machine learning to detect these images, so the images won't be reviewed by Apple itself but rather processed by an algorithm. Because this is all done on-device, the images never leave the phone for analysis (much like Apple Pay, for example). From Fast Company:

This new feature could be a powerful tool for keeping children safe from seeing or sending harmful content. And because the user's iPhone scans photos for such images on the device itself, Apple never knows about or has access to the photos or the messages surrounding them—only the children and their parents will. There are no real privacy concerns here.

The measure also only applies to images, not text.

CSAM detection

The most controversial and complex measure is CSAM detection, Apple's bid to stop the spread of Child Sexual Abuse Material online. From Apple:

To help address this, new technology in iOS and iPadOS will allow Apple to detect known CSAM images stored in iCloud Photos. This will enable Apple to report these instances to the National Center for Missing and Exploited Children (NCMEC). NCMEC acts as a comprehensive reporting center for CSAM and works in collaboration with law enforcement agencies across the United States.

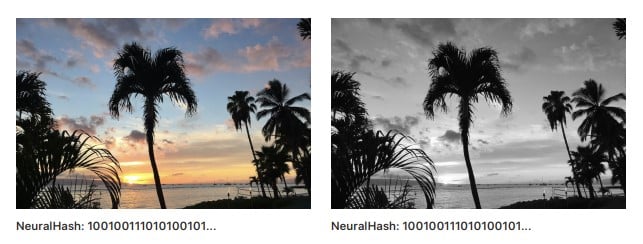

Apple's new measures will check users' photos that are about to be uploaded to iCloud Photos against a database of images known to contain CSAM. These images come from the National Center for Missing and Exploited Children and other organizations in the sector. The system can only detect illegal, already documented photos containing CSAM, without ever seeing the photos themselves or scanning your photos once they're in the cloud. From Apple:

Apple's method of detecting known CSAM is designed with user privacy in mind. Instead of scanning images in the cloud, the system performs on-device matching using a database of known CSAM image hashes provided by NCMEC and other child safety organizations. Apple further transforms this database into an unreadable set of hashes that is securely stored on users' devices. Before an image is stored in iCloud Photos, an on-device matching process is performed for that image against the known CSAM hashes. This matching process is powered by a cryptographic technology called private set intersection, which determines if there is a match without revealing the result. The device creates a cryptographic safety voucher that encodes the match result along with additional encrypted data about the image. This voucher is uploaded to iCloud Photos along with the image.

None of the contents of the safety vouchers can be interpreted by Apple unless an account crosses a threshold of known CSAM content, and Apple says the chance of incorrectly flagging a given account is one in one trillion per year. Only when the threshold is exceeded is Apple notified, so that it can manually review the report and confirm there is a match. If Apple confirms a match, it disables the user's account and sends a report to NCMEC.
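Apple's technical summary attributes this threshold behavior to a technique called threshold secret sharing. As a rough illustration of the idea only, here is textbook Shamir secret sharing in Python: a key is split so that any `threshold` shares reconstruct it, while fewer shares yield an unrelated value. Treating each matching safety voucher as carrying one share is a simplification, and the key, the threshold of 3, and the function names are hypothetical; Apple's actual construction is more involved.

```python
import secrets

PRIME = 2**127 - 1  # Mersenne prime; all arithmetic happens modulo PRIME

def split_secret(secret: int, threshold: int, n_shares: int) -> list[tuple[int, int]]:
    """Hide `secret` as the constant term of a random polynomial of degree threshold - 1."""
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, n_shares + 1):
        y = 0
        for c in reversed(coeffs):  # Horner's method evaluates the polynomial at x
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares

def recover_secret(shares: list[tuple[int, int]]) -> int:
    """Lagrange interpolation at x = 0; only correct with at least `threshold` shares."""
    result = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        result = (result + yi * num * pow(den, -1, PRIME)) % PRIME
    return result

# Hypothetical usage: one share travels with each matching voucher.
account_key = 123456789
shares = split_secret(account_key, threshold=3, n_shares=10)
print(recover_secret(shares[:3]) == account_key)  # True: threshold reached
print(recover_secret(shares[:2]) == account_key)  # almost certainly False
```

Below the threshold, the shares reveal nothing about the key, which is the property that keeps voucher contents unreadable until an account crosses it.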

So is Apple going to scan all my photos?

Apple isn't scanning your photos. It is checking the numerical value assigned to each photo against a database of known illegal content to see if they match.
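To illustrate what checking a photo's numerical value means, here is a deliberately crude Python stand-in for NeuralHash: an 8x8 "average hash" that turns a tiny grayscale image into a 64-bit number, which is then looked up in a set of known hashes. NeuralHash itself uses a neural network, and Apple transforms its database into an unreadable set of hashes, so the hash function, the plain Python set, and the sample images are all assumptions for illustration.

```python
def average_hash(pixels: list[list[int]]) -> int:
    """Toy perceptual hash: one bit per pixel, set if the pixel is brighter than the mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    value = 0
    for p in flat:
        value = (value << 1) | (1 if p > mean else 0)
    return value

def matches_known(pixels: list[list[int]], known_hashes: set[int]) -> bool:
    # Only the resulting number is compared; nothing tries to work out what the picture shows.
    return average_hash(pixels) in known_hashes

# Hypothetical 8x8 grayscale images (values 0-255).
known_image = [[200 if (r + c) % 2 == 0 else 50 for c in range(8)] for r in range(8)]
brightened_copy = [[p + 10 for p in row] for row in known_image]
unrelated_image = [[r * 32 for c in range(8)] for r in range(8)]

database = {average_hash(known_image)}
print(matches_known(brightened_copy, database))  # True: same fingerprint
print(matches_known(unrelated_image, database))  # False: not in the database
```

A lightly edited copy of a known image keeps the same fingerprint, while any image whose number isn't already in the set simply never matches.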

The system doesn't look at the image itself, only at its NeuralHash, as shown in the image above. It also only checks images uploaded to iCloud Photos, and it cannot detect images whose hashes aren't in the database. John Gruber explains:

The CSAM detection for images uploaded to iCloud Photo Library is not doing content analysis and is only checking fingerprint hashes against the database of known CSAM fingerprints. So, to name one common innocent example, if you have photos of your kids in the bathtub, or otherwise frolicking in a state of undress, no content analysis is performed that tries to detect that, hey, this is a picture of an undressed child.

Or, as Fast Company puts it:

Because the on-device scanning of such images checks only for fingerprints/hashes of already known and verified illegal images, the system is not capable of detecting new, genuine child pornography or misidentifying an image such as a baby's bath as pornographic.

Can I opt out?

Apple has confirmed to iMore that its system can only detect CSAM in iCloud Photos, so if you switch off iCloud Photos, you won't be included. Plenty of people rely on iCloud Photos and its obvious benefits, though, so that's a big trade-off. So yes, you can opt out, but at a cost some people might consider unfair.

Will this flag photos of my children?

The system can only detect known images containing CSAM held in NCMEC's database. It isn't trawling for photos that contain children and then flagging them; as mentioned, the system can't actually see the content of the photos, only each photo's numerical value, its "hash". So no, Apple's system won't flag photos of your grandkids playing in the bath.

What if the system gets it wrong?

Apple is clear that built-in protections all but eliminate the chance of false positives. It says the chance of the automated system incorrectly flagging a user is one in one trillion per year. If someone were flagged incorrectly, Apple would see this once the threshold was crossed and the images were manually reviewed, at which point it could verify the mistake and nothing further would be done.
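Why a threshold helps can be shown with some back-of-the-envelope arithmetic. Assuming each photo falsely matches independently with a small probability p (the values below are hypothetical, not Apple's figures), the chance that an account with n photos reaches t false matches is a binomial tail, which shrinks dramatically as t grows. This Python sketch computes it in log space to avoid overflowing on huge binomial coefficients.

```python
import math

def prob_account_flagged(n_photos: int, p_false_match: float, threshold: int) -> float:
    """P(at least `threshold` of `n_photos` photos falsely match), assuming independence.

    Sums the binomial tail C(n, k) * p**k * (1 - p)**(n - k) for k >= threshold,
    working with logarithms so the binomial coefficients never overflow.
    """
    log_p = math.log(p_false_match)
    log_q = math.log1p(-p_false_match)
    log_n_fact = math.lgamma(n_photos + 1)
    total = 0.0
    for k in range(threshold, n_photos + 1):
        log_term = (log_n_fact - math.lgamma(k + 1) - math.lgamma(n_photos - k + 1)
                    + k * log_p + (n_photos - k) * log_q)
        total += math.exp(log_term)
    return total

# Hypothetical numbers: 10,000 photos, a one-in-a-million false match rate per photo.
for t in (1, 2, 5, 10):
    print(t, prob_account_flagged(10_000, 1e-6, t))
```

The independence assumption is the weak point of this kind of estimate; Apple's one-in-a-trillion figure comes from its own analysis, which this sketch does not reproduce.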

More questions

Plenty of people have raised issues and concerns about some of these measures, and some have suggested Apple was mistaken to announce them as a package, since many people seem to be conflating them. Apple's plans were also leaked before the announcement, so many people had already formed opinions and raised potential objections before the official details were out. Here are some other questions you might have.

Which countries will get these features?

Apple's Child Safety features are only coming to the U.S. at this time. However, Apple has confirmed that it will consider rolling out these features to other countries on a country-by-country basis after weighing the legal options, which suggests a rollout beyond U.S. shores is at least on the table.

What if an authoritarian government wanted to use these tools?

Many people worry that CSAM scanning or the machine learning in Messages could pave the way for a government that wants to crack down on political imagery or use these tools for censorship. The New York Times put this very question to Apple's Erik Neuenschwander:

"What happens when other governments ask Apple to use this for other purposes?" Mr. Green asked. "What's Apple going to say?" Mr. Neuenschwander dismissed those concerns, saying that safeguards are in place to prevent abuse of the system and that Apple would reject any such demands from a government. "We will inform them that we did not build the thing they're thinking of," he said.

Apple says that the CSAM system is designed purely for CSAM image hashes and that there's nowhere in the process where Apple could add to the list of hashes, say at the behest of a government or law enforcement. Apple also says that because the hash list is baked into the operating system, every device has the same set of hashes, and because it ships one operating system globally, there isn't a way to modify the list for a specific country.
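The claim that every device carries the same hash list is the kind of property that can be checked by comparing fingerprints of the list. As a purely hypothetical illustration (the article says Apple transforms the database into an unreadable set of hashes, and it doesn't describe any such check), the Python sketch below computes an order-independent SHA-256 digest of a list of hash entries, so two copies can be compared as a single string.

```python
import hashlib

def database_fingerprint(entries: list[bytes]) -> str:
    """Order-independent SHA-256 digest of a list of hash entries."""
    digest = hashlib.sha256()
    for entry in sorted(entries):
        # Length-prefix each entry so b"ab" + b"c" can't collide with b"a" + b"bc".
        digest.update(len(entry).to_bytes(4, "big"))
        digest.update(entry)
    return digest.hexdigest()

# Hypothetical copies of the list on two devices, plus a modified copy.
device_a = [b"\x01\x02", b"\x03\x04", b"\x05\x06"]
device_b = [b"\x05\x06", b"\x01\x02", b"\x03\x04"]
modified = device_a + [b"\x07\x08"]
print(database_fingerprint(device_a) == database_fingerprint(device_b))  # True
print(database_fingerprint(device_a) == database_fingerprint(modified))  # False
```

Sorting first means two devices that store the same entries in a different order still produce identical fingerprints, while any added or removed entry changes the digest.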

The system also only works against a database of existing images, so it couldn't be used for something like real-time surveillance or signals intelligence.

What devices does this affect?

The new measures are coming to iOS 15, iPadOS 15, watchOS 8, and macOS Monterey, covering iPhone, iPad, Apple Watch, and the Mac. The exception is CSAM detection, which is only coming to iPhone and iPad.

When will these new measures take effect?

Apple says all the features are coming "later this year", meaning 2021, but we don't know much beyond that. It seems likely that Apple will have them baked into the various operating systems by the time it releases its software to the public, which could happen with the launch of iPhone 13 or shortly after.

Why didn't Apple announce this at WWDC?

We don't know for sure, but given how controversial these measures are proving to be, it's likely this is all that would have been talked about if it had. Perhaps Apple simply didn't want to detract from the rest of the event, or perhaps the announcement wasn't ready.

Why is Apple doing this now?

That's also unclear. Some have postulated that Apple wants to move CSAM scanning off its iCloud servers and onto devices. John Gruber wonders whether the CSAM measures are paving the way for a fully E2E-encrypted iCloud Photo Library and iCloud device backups.

Conclusion

As we said at the start, this is a new announcement from Apple and what we know is still evolving, so we'll keep updating this page as more answers and more questions roll in. If you have a question or concern about these new plans, leave it in the comments below or over on Twitter @iMore.

Want more discussion? We talked to famed Apple expert Rene Ritchie about the latest changes on the iMore show.

Stephen Warwick has written about Apple for five years at iMore and previously elsewhere. He covers all of iMore's latest breaking news regarding all of Apple's products and services, both hardware and software. Stephen has interviewed industry experts in a range of fields including finance, litigation, security, and more. He also specializes in curating and reviewing audio hardware and has experience beyond journalism in sound engineering, production, and design. Before becoming a writer, Stephen studied Ancient History at university and also worked at Apple for more than two years. Stephen is also a host on the iMore show, a weekly podcast recorded live that discusses the latest in breaking Apple news, as well as featuring fun trivia about all things Apple. Follow him on Twitter @stephenwarwick9.