Why iPhone 7 still focuses on true-to-life photography

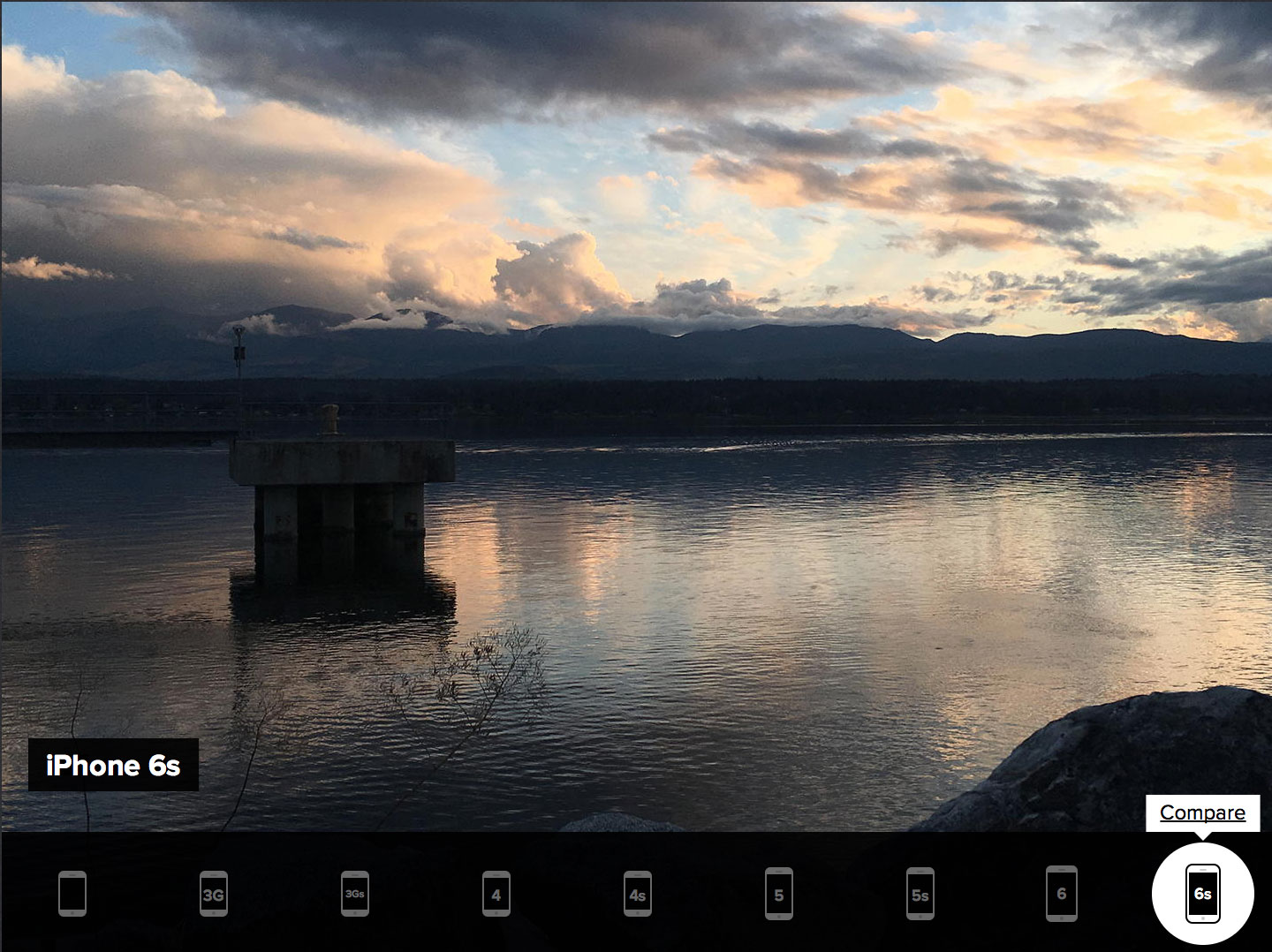

I originally wrote this explainer back in March, following some comparisons between the then-new Samsung Galaxy S7 and the then-six-month-old iPhone 6s camera. Samsung and Apple both make great cameras but have very different philosophies. Apple keeps their built-in camera app as simple and easy to use as possible, and chooses to render their final photos in as natural a way as possible. Samsung, by contrast, prefers more options in their camera apps, and crushes the blacks and boosts the saturation on their final photos to make them more appealing. It's a subjective rather than objective difference, which is why people can disagree over which approach they like better.

Now that iPhone 7 has launched, we've gone through a new round of camera comparisons, and gotten the same kind of disagreement over the results. Some of us just love the way Samsung renders the photos, much as we love the way TV sets look in display mode on the shelves of big box retailers. Others prefer a natural base so we can choose to adjust them just the way we like, or not at all.

Six months ago, I spoke with Josh Ho from Anandtech and Lisa Bettany from Camera+ about Apple's choices when it came to the iPhone camera. You can read what they originally said below. To help frame where we are now, with iPhone 7, here's what Josh said in his Anandtech review:

Overall, the iPhone 7 camera is impressive and I would argue is holistically a better camera for still photos than the Galaxy S7 on the basis of more accurate color rendition, cleaner noise reduction, and lack of aggressive sharpening. It may not be as lightning fast as the Galaxy S7 or have as many party tricks, but what it does have is extremely well executed. The HTC 10 is definitely better than the iPhone 7 at delivering sheer detail when only comparing the 28mm focal length camera, but the post-processing has a tendency to bleed colors in low light which sometimes causes the images to look a bit soft. In daytime the iPhone 7 Plus' 56mm equivalent camera helps to keep it well ahead of the curve when it comes to sheer detail and really is a revelatory experience after years of using smartphone cameras that have focal lengths as short as 22mm and can't really capture what the eye sees.

iPhone 7 also shoots in DCI-P3 wide-gamut color, which means it captures better reds, magentas, and oranges than most other cameras. Thanks to the DCI-P3 displays on iPhone 7, the 9.7-inch iPad Pro, and the Retina 5K iMac, along with the color management built into iOS 10 and macOS Sierra, Apple also makes sure you can preserve that gamut from capture to display, and across multiple devices.
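To make the wide-gamut point a little more concrete, here's a minimal sketch of why those extra reds matter. It assumes the Display P3 variant of DCI-P3 (same primaries, D65 white point) and uses the commonly published linear conversion matrices, rounded to seven decimals. The function names are mine, purely for illustration, and it skips gamma encoding entirely. It simply asks whether a color that's legal in P3 still fits inside sRGB.

```python
# Linear-light conversion matrices, both assuming a D65 white point.
# Values are the commonly published ones, rounded to 7 decimals.
P3_TO_XYZ = [
    [0.4865709, 0.2656677, 0.1982173],
    [0.2289746, 0.6917385, 0.0792869],
    [0.0000000, 0.0451134, 1.0439444],
]
XYZ_TO_SRGB = [
    [3.2404542, -1.5371385, -0.4985314],
    [-0.9692660, 1.8760108, 0.0415560],
    [0.0556434, -0.2040259, 1.0572252],
]


def mat_vec(matrix, vector):
    """Multiply a 3x3 matrix by a 3-element vector."""
    return [sum(row[i] * vector[i] for i in range(3)) for row in matrix]


def p3_to_linear_srgb(rgb):
    """Convert linear Display P3 RGB to linear sRGB by way of XYZ."""
    return mat_vec(XYZ_TO_SRGB, mat_vec(P3_TO_XYZ, rgb))


def fits_in_srgb(rgb, tol=1e-3):
    """True if every converted channel lands in [0, 1], within a small
    tolerance that absorbs the rounding in the matrices above."""
    return all(-tol <= c <= 1 + tol for c in p3_to_linear_srgb(rgb))


if __name__ == "__main__":
    pure_p3_red = [1.0, 0.0, 0.0]
    mid_gray = [0.5, 0.5, 0.5]
    # The red channel comes out above 1 and green/blue go negative,
    # so sRGB cannot represent this color without clipping.
    print(p3_to_linear_srgb(pure_p3_red))
    print(fits_in_srgb(pure_p3_red))  # False
    print(fits_in_srgb(mid_gray))     # True
```

The most saturated P3 red lands outside the sRGB range, which is the kind of color a wide-gamut capture-to-display pipeline can keep and an sRGB-only one has to clip.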

Now back to that explainer...

Balancing acts

A few years ago I was on a podcast with an optical engineer and smartphone reviewer and we were discussing cameras. What he said stuck with me — that given the wide range of options when it came to everything from pixel count to pixel size, from aperture to image signal processor, Apple was making smart choices and achieving the best balance possible.

That's proven to be true over time. We've seen cameras with too many pixels and too few, with the distortions of angles too wide and image signal processors too aggressive.

Apple, though, has steadfastly stuck with balance.

Rather than going for the highest megapixel number, even if it means shredding the pixel size, rather than oversaturating and oversharpening, boosting shadows and exposure, rather than going for the hyper-real, Apple is obsessed with focusing on the really real.
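The megapixel trade-off is simple arithmetic. Here's a rough sketch, assuming a hypothetical 4.8 x 3.6 mm sensor (an illustrative size, not a published Apple spec) and ignoring the gaps and wiring between pixels. Cram more pixels onto the same piece of silicon and each one gets smaller and collects less light.

```python
import math


def pixel_pitch_um(sensor_width_mm, sensor_height_mm, megapixels):
    """Approximate width of one square pixel, in micrometers, assuming
    the whole sensor area is divided evenly among the pixels."""
    area_um2 = (sensor_width_mm * 1000.0) * (sensor_height_mm * 1000.0)
    return math.sqrt(area_um2 / (megapixels * 1_000_000))


def relative_light_per_pixel(pitch_um, reference_pitch_um):
    """Light-gathering area of one pixel relative to a reference pixel."""
    return (pitch_um / reference_pitch_um) ** 2


if __name__ == "__main__":
    # Hypothetical sensor dimensions, used only for illustration.
    width_mm, height_mm = 4.8, 3.6

    pitch_12mp = pixel_pitch_um(width_mm, height_mm, 12)
    pitch_20mp = pixel_pitch_um(width_mm, height_mm, 20)

    print(f"12 MP: {pitch_12mp:.2f} um per pixel")
    print(f"20 MP: {pitch_20mp:.2f} um per pixel")
    ratio = relative_light_per_pixel(pitch_20mp, pitch_12mp)
    print(f"Each 20 MP pixel gets about {ratio:.0%} of the light")
```

On that assumed sensor, going from 12 to 20 megapixels leaves each pixel with roughly 60 percent of the light-gathering area, which the image processing then has to make up for.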

As shown on 60 Minutes, Apple has a team of several hundred people working on the camera system. They look at hundreds of thousands of images of every scene type, in every scenario, to ensure everything from the sensor to the processor to the software is making the right decision every step of the way.

The goal is to capture an image as true-to-life as possible, with colors as natural as possible, and to make sure it looks accurate not just on the iPhone's display, but on your friend's or family member's phone, on a computer's display or a television's display, and on any prints you may choose to make.

If you want to apply effects, if you want to crush the blacks or boost the sat, Apple believes that should be your choice, not theirs. You should be able to add to it freely and not have to worry about taking it away.

Renowned iPhone photographer and co-founder of the acclaimed Camera+ app, Lisa Bettany, shared the following:

The iPhone camera picture quality has dramatically improved over the past nine iterations. It's approaching a good enough quality that photographers can now use an iPhone as working camera, instead of a tool to simply capture behind the scenes action. The most recent versions of the iPhone have added a lot of catchy things like "deep trench isolation" to make clearer and more vibrant images and they have succeeded. Images are more true to life now.

According to Joshua Ho, senior mobile editor at Anandtech, there's a lot going into that:

Apple is clearly focusing on a balanced camera. It's one thing to chase numbers but in cameras nothing is free. It's very easy for manufacturers to "game" things like megapixel counts. I'm not going to name any names here, but the examples I'm thinking of tend to have almost a third of the photo out of focus relative to the center. Likewise, by increasing aperture, you're going to inevitably increase issues with chromatic aberration and other forms of distortion. Apple tends to avoid playing these marketing games. Across the board, Apple's camera consistently produces images with natural post-processing, relatively high color accuracy, and competitive levels of detail. Other manufacturers definitely beat Apple in some places, but tend to fall short in areas like image processing or other areas where attention to detail is critical to good photos and videos, and good user experience.

After effects

Apple is in the enviable position of both making their own chips, the A-series, and being able to deploy those chips in every phone, in every region. That leads to remarkable control of the complete pipeline, and remarkable consistency in results across the board.

Some companies put bigger lenses up front. Some put entire server farms at the end. Apple wants to take what it can capture up front and give you the best result possible before it gets uploaded anywhere, if it gets uploaded anywhere at all.

If you choose to, you can add filters and effects from a variety of amazing third party apps. You can even add external lenses like wide angle, zoom, fisheye, and more. That lets you get the best of all worlds — a natural, true-to-life image that you can modify if and as desired. Or not. It's entirely up to you.

When it makes sense and doesn't introduce disruptive complexity, Apple does bring new features like easy-to-take panoramas, time-lapses, iCloud Photo Library, manual controls, and most recently, Live Photos.

When technology matures, and Apple can maintain their balance and focus on naturalness, they increase megapixels or pixel size, or widen the aperture. If any of those things cause inconsistency or distortions, even if the numbers look great on a spec comparison, Apple waits.

Shots on shots

No one camera, like no one phone, will appeal to everyone, and it's important that we have many different companies trying many different approaches. That's what gives customers options and pushes everyone to do better.

There are things I'd love to see Apple add to the iPhone camera. Faster access would be terrific. A double click of the sleep/wake button, for example, could launch you right into shooting mode.

So would the ability to automagically save Live Photos, Bursts, or slide shows as videos or animated GIFs. Because, fun.

Lisa would love to see a way to further reduce or eliminate the blocky pixelation she still finds in images, especially in skin tones. Also:

The one major hurdle of the iPhone camera has always been the fixed aperture which hinders our creative control. I'd be thrilled to see adjustable aperture in the next iPhone version.

Josh, clearly trolling, thinks Apple could elect to just go with a bigger camera hump. More likely, though:

Apple is pretty close to the limit of what's possible with a single conventional Bayer CMOS camera. To really push the envelope I'd be interested in seeing systems with dual cameras, new color filter arrays, and other emerging technologies.

One area where Apple is already doing exemplary work is accessibility. Where some might simply assume the blind wouldn't need a camera, Apple realized everyone has family and friends they may want to share photos with. So, Apple made the Camera and Photos apps accessible to the blind as well. I'm curious how much further Apple can take that.

Keeping focus

Regardless of where Apple goes next, it's the end result that has to matter most: that we're able to capture the memories and moments we want to capture, accurately and easily, and in a way that lets us save and share them quickly, whenever and with whomever we want.

Portrait mode for iPhone 7 Plus ships as part of iOS 10.1 this fall. Can't wait to see what the team does for iPhone 8.

Rene Ritchie is one of the most respected Apple analysts in the business, reaching a combined audience of over 40 million readers a month. His YouTube channel, Vector, has over 90 thousand subscribers and 14 million views and his podcasts, including Debug, have been downloaded over 20 million times. He also regularly co-hosts MacBreak Weekly for the TWiT network and co-hosted CES Live! and Talk Mobile. Based in Montreal, Rene is a former director of product marketing, web developer, and graphic designer. He's authored several books and appeared on numerous television and radio segments to discuss Apple and the technology industry. When not working, he likes to cook, grapple, and spend time with his friends and family.