I want handwriting recognition on the iPhone and iPad — even if it stinks

When I was a kid, I got my Dad's old Newton MessagePad 2100 — Apple's original personal digital assistant — as a "hand-me-down". I put that phrase in quotes because it really wasn't so much a hand-me-down as it was a "hand-it-over": After a year of intermittently playing with his 2100, I claimed it for my own.

While I may have... exaggerated some of the Newton's abilities to my awed classmates (I distinctly remember telling a teacher, "I'm going to scan the blackboard and have the Newton take a picture of your notes," ha ha), in 1999 there was nothing I loved more than demonstrating its handwriting recognition. It almost always earned a "We're living in the future!", if not an "Oooo, let me try!"

@settern pic.twitter.com/kVY2TkLQ5f (Christoph Wiese, @xtoph, March 8, 2016)

Much as I hated longform writing and as terrible as my penmanship was, there was still something pretty magical and otherworldly about writing block or cursive letters and watching them transform into digital text. By the year 2000, I had convinced most of my teachers to let me turn in typed work, but having that Newton MessagePad probably saved future Serenity's penmanship from becoming truly atrocious: I was constantly writing and doodling with it.

Sixteen years later, the Newton lives again with my Dad (though probably in a storage box somewhere), and I've got both an iPhone and an iPad Pro to obsess over. But while there are many miraculous features I could demonstrate, handwriting recognition tricks have yet to make it to iOS.

Egg freckles and handwriting troubles

There are plenty of reasons why Apple hasn't yet implemented system-level handwriting recognition in iOS. For one, you need an exceptionally good pen, and until the introduction of the Pencil, no third-party stylus had the precision or low enough lag to be a truly useful tool.

There's also processing power to consider, as well as stigma. Like Siri, handwriting recognition works as a transcription service: to translate your physical marks into typed characters, it needs a substantial dictionary and enough computing power to transcribe your words, whether on your device's processor or on online servers. That means more cloud infrastructure, more powerful iOS devices, or both, as well as the engineers to build a smart dictionary.

If you've ever dealt with Siri errors, imagine the problems that might result from poor handwriting, or from trying to tell the difference between lowercase, uppercase, and cursive letters. Indeed, early Newton models took a lot of flak for mistranslating the written word, though later models, like my dear 2100, saw significant improvement.

I also wonder, along those lines, if our collective penmanship hasn't become so disastrous that transcribing it into digital text would be an even bigger headache than previously imagined. Many primary schools no longer teach handwriting, and most of us write more words on a virtual keyboard than with pen and paper.

In the end, we're talking about a minor feature, hardly requested, that likely takes a backseat to all the more important projects Apple has in the pipeline — if the company's engineers are thinking about it at all.

Handwriting our way forward

But despite all the potential problems with building handwriting recognition into iOS, part of me still wants to see the feature come to the iPad, even if it's only a beta tucked into the Notes app.

We now have a top-tier stylus for iPad in the form of the Pencil, and it might be coming to smaller iPads soon. iOS also has system-level APIs that support excellent palm rejection, accuracy, and low latency.

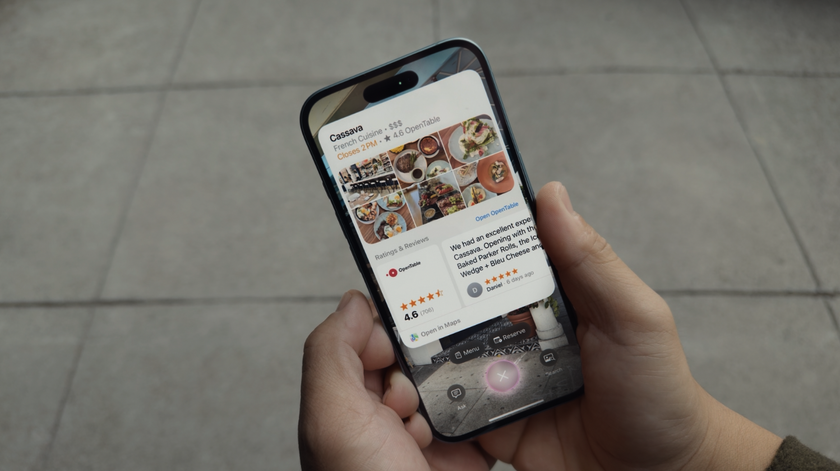

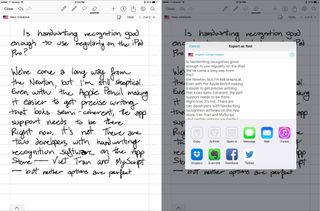

Additionally, the iPad Pro's beefy A9X chip should be more than capable of handling the transcription that handwriting recognition requires, and there are two developers out there to prove it: indie developer Viet Tran and handwriting recognition leader MyScript both have apps on the App Store that perform handwriting recognition.

The apps worked quickly in my tests, but unfortunately neither supports the Pencil's palm rejection, lag reduction, or pressure sensitivity, which makes for a subpar writing experience. If those apps added proper Pencil support, the experience would be downright pleasant. Both apps' underlying transcription engines are impressive, and if my handwriting weren't so sloppy on account of the lag, their results might be even better.

Maybe the solution is just that: let third-party apps take control of the handwriting recognition market, and let Apple continue to work on other, more important aspects of iOS (like drag-and-drop between Split View panes). I hope I'm wrong, though. I don't want handwriting recognition limited to a single app, or offered only as an option that requires a third-party keyboard. I want system-level support.

And for all the reasons not to add this feature to iOS, there are some pretty compelling reasons to do it, too.

If nothing else, Apple has some pretty good handwriting recognition software lying around: the company's aging Inkwell technology is based on the original Newton Rosetta framework, and lets people with a connected graphics tablet transcribe letters and draw pictures. It hasn't been officially updated in ages, but it's still supported as of OS X El Capitan, and the recognition engine is halfway decent.

Then there are Apple's tablet competitors: Windows 10 has handwriting recognition built into the Surface line, while Google released a handwriting keyboard for Android in mid-2015.

Knowing all that, I could see an updated version of Inkwell making its way into the Notes app in iOS 10, even if it were only a feature for those with Apple Pencils and compatible iPads. Apple put a renewed focus on Notes with iOS 9, and even if that was partially just to demonstrate the Apple Pencil's capability, what better way to expound on that utility than handwriting recognition?

In Notes, a button next to the Sketch tool that transcribes your written words would be useful for business professionals who prefer handwriting to typing. Anyone walking around with an iPad in their arms might choose to write rather than finger-type their notes; doctors were my first thought, but architects and teachers could certainly benefit, too. And the more people using Apple's software, the faster it could improve.

To handwrite or not to handwrite?

What side do you come down on, iMore? Is it worth Apple devoting resources to system-level handwriting recognition now that we have the Pencil, or should we leave it up to app developers? Let me know; meanwhile, I'll be over here in the corner doodling Egg Freckles.

Serenity was formerly the Managing Editor at iMore, and now works for Apple. She's been talking about, writing about, and tinkering with Apple products since she was old enough to double-click. In her spare time, she sketches, sings, and in her secret superhero life, plays roller derby. Follow her on Twitter @settern.