M1 — Apple's maniacal focus on silicon comes to the Mac

I hate backstory in columns. I just yell, "not today, Satan!" and skip to the actual substance. But, in this case, the backstory is actually important, dammit. Because one of several common misconceptions making the rounds right now is that M1, which is the marketing name for Apple's first custom system-on-a-chip for Macs, is… a rev A board. Something we should be worried or apprehensive about.

The truth is, it's actually 11th generation Apple silicon. Let me explain. No, there is too much. Let me sum up!

From A4 to 12Z

The original iPhone in 2007 used an off-the-shelf Samsung processor re-purposed from set-top boxes and the like. But the original iPad in 2010 debuted the Apple A4, the first Apple-branded system-on-a-chip. And that same Apple A4 also went into the iPhone 4 released just a few months later.

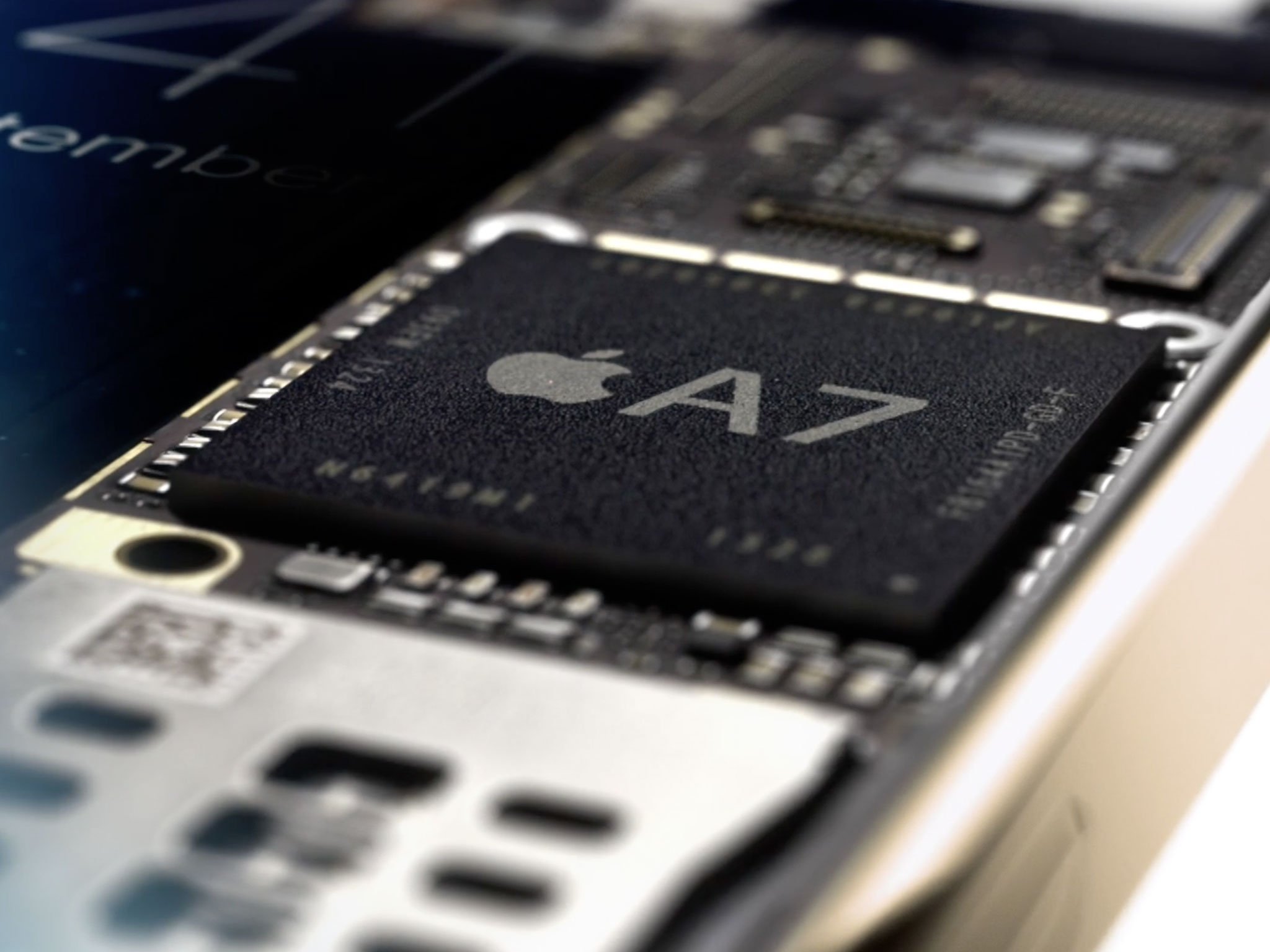

At first, Apple licensed ARM Cortex cores, but with the A6 in 2012, they switched to licensing just the ARMv7-A instruction set architecture, the ISA, and began designing their own, custom CPU cores instead. Then, with the A7 in 2013, they made the leap to 64-bit and ARMv8-A, not just with the more modern instruction set, but with a new, clean, targeted architecture that would let them start scaling for the future.

That was a huge wake-up call to the entire industry, especially Qualcomm, which was caught absolutely flat-footed, content up until that point to just sit at 32-bit and milk as much profit out of their customers as possible. But it was also just the kick in the apps they needed to start making mobile silicon really competitive.

Apple didn't let up, though. With the A10 Fusion in 2016, they introduced performance and efficiency cores, akin to what ARM markets as big.LITTLE, so that continued increases in power at the high end wouldn't leave a giant battery bleeding gap at the low end.

Apple had also begun making their own shader cores for the GPU, then their own custom IP for half-precision floating point to increase efficiency, and then, with the A11 in 2017, their first fully Custom GPU.

The A11 was also rebranded to Bionic. Because, in the early days, Apple had been leaning on the GPU for machine learning tasks, but that just wasn't as optimal or efficient as they wanted it to be. So, with the A11 Bionic, they debuted a new, dual-core ANE, or Apple Neural Engine, to take over those tasks.

And things just escalated from there until, now, today, we have the 11th generation of Apple silicon in the A14 Bionic, with its 4 efficiency cores, 2 performance cores, 4 custom GPU cores, and 16 (16!) ANE cores. Along with performance controllers to make sure each task goes to the optimal core or cores, ML controllers to make sure machine learning tasks go to the ANE, the GPU, or the special AMX, or Apple Machine Learning Accelerator, blocks on the CPU, media encode/decode blocks to handle heavier tasks like H.264 and H.265, audio signal processors for everything up to and including Dolby Atmos derived spatial audio, image signal processors for everything up to and including Smart HDR 3 and Deep Fusion, high-efficiency, high-reliability NVMe storage controllers, and the IP literally goes on and on.

In parallel, Apple had also been releasing beefed-up versions of these SoCs, starting with the iPad Air 2 and the Apple A8X in 2014, the X-as-in-extra-or-extreme. These versions had things like additional CPU and GPU cores, faster frequencies, heat spreaders, more and off-package RAM, and other changes designed specifically for the iPad and, later, iPad Pro.

Right now, those top out at the A12Z in the 2020 iPad Pro, which has 2 extra Vortex performance cores, 4 extra GPU cores, 2 extra GBs of RAM, and greater memory bandwidth than the A12 in the iPhone XS. And I say right now only because we haven't gotten an A14X yet. I mean, apart from the M1. Not really. But... kinda.

The silicon sword

Rumors of Apple Silicon Macs have been around basically for as long as Apple's been making silicon. Of iOS laptops and macOS ports. Of Apple dangling it over Intel's head like a silicon sword of Damocles to stress just how important — how overwhelmingly important — Apple's product goals were to them.

And the sad, simple truth is that it turned out not to be enough. As Apple kept up their cadence of A-series updates, each year, every year, for a decade, moving relentlessly, inexorably, to higher customization, higher performance efficiency, and smaller, and smaller die size — to TSMC's 7nm process with the A12 and now 5nm process in the A14, Intel… did the opposite. They stumbled, fell down, got up, ran into a wall, fell down again, got up, ran the wrong way, hit another wall, and now basically seem to be sitting on the floor, stunned, not sure what to do or where to go next.

They're just beginning to get their 10nm process successfully deployed for laptops, while they're once again going back to 14nm on the desktop and just throwing increased power draw at their problems. Which, one look at any of Apple's Mac computers would tell anyone, is the exact opposite of where they need to go.

Back in 2005, when Apple switched from PowerPC to Intel, Steve Jobs said it was about two things — performance per watt, and that there were Macs Apple wanted to make that they simply couldn't make if they stuck with PowerPC.

And that's the same reason Apple is switching from Intel to their own custom silicon today.

There are Macs that Apple wants to make that they simply can't if they stick with Intel.

Previously, it was enough for Apple to make the software and hardware and leave the silicon to Intel. Now, Apple needs to push all the way down to that silicon.

And, just like with the iPhone and iPad, Apple isn't a commodity silicon merchant; they don't have to make parts to fit into any generic computer, or support technologies they'd never use, like DirectX for Windows, they can make exactly, precisely, the silicon they really need to integrate with the hardware and software that really needs it. In other words, everything they've been doing with the iPhone and iPad for the past decade.

So, with all that in mind, a few years ago, a group of Apple's best and brightest locked themselves in a room, in a building, took a MacBook Air, a machine that had been suffering endless delays and disappointments thanks to Intel's anemic Y-Series Core M chips, and connected it to a very early prototype of what would become the M1.

And the rest… was about to make history.

The transition

Apple's CEO, Tim Cook, announced the transition from Intel to Apple Silicon for the Mac at WWDC 2020, then handed it off to Apple's Senior Vice President of Hardware Technologies (essentially silicon), Johny Srouji, and Senior Vice President of Software Engineering (essentially operating systems), Craig Federighi, to expound upon.

Johny said that Apple would be introducing a family of systems-on-a-chip, or SoC, for the Mac line. That was important because Intel Macs have been using the traditional, modular, PC model, where the GPU could be integrated but could also be discrete, and the memory was separate, as was the T2 co-processor Apple had been using to work around some of Intel's… shortcomings. It was like… a bunch of charcuterie on a board. Where everything had to be reached for separately. The SoC would be like a sandwich, all layered tightly together, with the memory on-package and Apple Fabric as sort of the mayo that ties it all together, along with a really, really big cache that keeps it all fed.

Craig said that it would run a new generation of universal binaries compiled specifically for Apple silicon, but also Intel-only binaries through a new generation of Rosetta translation, virtual machines through hypervisor, and even iOS and iPadOS apps, their developers willing. Maybe just to take a little bit of the sting out of losing x86 compatibility with Windows and Boot Camp. At least at first.

And what's particularly funny is that back when Apple first announced the iPhone, some in the industry laughed and said pager and PDA companies had been making smartphones for years; there was no way a computer company could walk in and take away that business. But, of course, it took a computer company to understand that a smartphone couldn't be grown from a pager or PDA; it had to be distilled down from a computer.

Now, with M1, some in the industry laughed and said CPU and GPU companies had been powering laptops and PCs for years; there was no way a phone and tablet company could walk in and take away that business. Of course, it takes a phone and tablet company to understand that many modern PCs can't be cut down from hot, power-hungry desktop parts; they have to be built up from incredibly efficient, super low-power mobile parts.

And when that's what you do, the efficiency advantage holds true, and, more than that, it turns into a performance advantage.

And that's exactly what Apple's Vice President of hardware, John Ternus, announced at Apple's November "One More Thing" event… and what Johny Srouji and Craig Federighi again expanded on… starting with M1.

A chipset that would let the MacBook Air, for example, run workloads that no one would have previously dreamed possible on Intel Y-Series. And with battery life to spare.

Silicon supersetting

When trying to quickly describe M1 in the past, I've used the shorthand of… imagine an A14X-as-in-extra-performance-and-graphics-cores++-as-in-plus-Mac-specific-IP.

And… I'm going to stick to that, although I think Apple would say the M-series for Mac is more of a superset of the A-series for iPhone and iPad.

For a long time now, Apple's been working on a scalable architecture, something that would let their silicon team be as efficient as their chipsets. And that means creating IP that could work in an iPhone, but also an iPad, even an iPad Pro, and eventually be repurposed all the way down to an Apple Watch.

This fall, for example, Apple announced both the iPhone 12 and iPad Air 4, both with the A14 Bionic chipset. And, sure, the iPhone 12 will hit something like the image signal processor far more frequently than the iPad Air will, and the iPad Air will use its bigger thermal envelope to better sustain higher workloads like long photo editing sessions, but that they both perform so well on the same chipset, rather than requiring completely different chipsets, is a huge time, cost, and talent savings.

Likewise, the Apple Watch 6 on its S6 system-in-package is now using cores based on the A13 architecture, so advances in the iPhone and iPad benefit the Watch as well. And, at some point, we'll probably get an iPad Pro with an A14X as well.

Because making silicon for different devices is often prohibitively expensive. It's why Intel tablets are heavily performance gated even when they require fans and why Qualcomm is using twice-rehashed old phone chips.

That heavy investment in integrated, scalable architecture is what lets Apple cover all these products efficiently, without the complexity that would come from having to treat each one as a separate client.

And it also means M1 gets to leverage many of the same latest, greatest IP blocks as A14. Only the implementation differs.

For example, the compute engines are close to what a theoretical A14X would look like: 4 high-efficiency CPU cores, 4 high-performance CPU cores, and 8 GPU cores, along with twice the memory bandwidth and more memory.

But the M1 CPUs can be clocked higher, and it has more memory. iOS hasn't gone beyond 6GB in the iPad Pro or latest iPhone Pros. But the M1 supports up to 16GB.

Then there's the Mac-specific IP. Things like hypervisor acceleration for virtualization, new texture formats in the GPU for Mac-specific application types, display engine support for the 6K Pro Display XDR, and the Thunderbolt controllers which lead out to the re-timers. In other words, things the iPhone or iPad don't need… or currently just don't have.

It also means that the T2 co-processor is gone now because that was always really just a version of the Apple A10 chipset handling all the things Intel just wasn't as good at. Literally, a short series of chips Apple had to make and run BridgeOS on — a variant of watchOS — just to handle everything Intel couldn't.

And all of that is now integrated into the M1. And the M1 has the latest generation of all those IP, from the Secure Enclave to the accelerator and controller blocks, and on and on. The scalable architecture means it'll almost certainly stay that way as well, with all the chipsets benefitting from the advances and investments in any of the chipsets.

One silicon job

To figure out how to make proper, higher performance, high-efficiency silicon for the Mac, Apple did… exactly what they did to figure out how to make it for the iPhone and iPad. They studied the types of apps and workloads people were already using and doing on the Mac.

That involves Johny Srouji and Craig Federighi sitting in a room and hashing out priorities based on where they are and where they want to go, all the way from the atoms to bits and back again.

But it also involves testing a ton of apps, from popular to pro, Mac-specific, and open source, and even writing a ton of custom code to throw at their silicon, to test and try and anticipate apps and workloads that may not exist yet but are reasonably assumed to be coming next.

On a more granular level, Apple can use its silicon to speed up the way code runs. For example, retain and release calls, which are frequent in both Objective-C and Swift, can be accelerated, making those calls shorter, which makes everything feel faster.
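If retain and release are unfamiliar, here's a minimal sketch of the idea in Python. It's a toy model of reference counting, not Apple's runtime: the `RefCounted` class and its fields are made up for illustration. The point is just that every change of ownership costs one of these tiny calls, which is why shaving time off each one adds up.

```python
class RefCounted:
    """Toy object with manual retain/release, loosely modeled on Objective-C."""

    def __init__(self, name):
        self.name = name
        self.retain_count = 1      # the creator owns one reference
        self.deallocated = False

    def retain(self):
        # A new owner takes a reference.
        self.retain_count += 1
        return self

    def release(self):
        # An owner gives up its reference; the last one out frees the object.
        self.retain_count -= 1
        if self.retain_count == 0:
            self.deallocated = True


image = RefCounted("thumbnail")
image.retain()     # a second owner, for example a cache
image.release()    # the first owner is done
image.release()    # the cache is done, so the object is freed
```

A real app makes calls like these constantly, so even a small per-call saving in silicon shows up everywhere.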

Previously, I joked that the silicon teams' one job was to have iPhones and iPads run faster than anything else on the planet. But it's not really a joke and is kind of actually less specific than that — their job is to run faster than anything else on the planet, given the thermal enclosure of whatever device they're designing against. That's what drives their… maniacal focus on performance efficiency. And now that just so happens to include the Mac.

Not M for magic

There's no magic, no pixie dust in the M1 that lets the Mac perform in ways that just weren't previously possible. There are just good, solid ideas and engineering.

For example, just powering up a core on a low-power Intel system might burn 15 watts of power; on a higher-end system, maybe 30 watts or more. That's something… unimaginable for an architecture that comes from the iPhone. In that tiny, tiny box, you're allowed single-digit burn, nothing more.

That's why, with previous Intel Y-Series MacBooks, the performance was so gated so always.

Intel would use opportunistic turbo to try and take advantage of as much of the machine's thermal capacity as possible. But frequency requires higher voltage, much higher voltage, which draws more power and generates more heat.

Intel was willing to do this, goose frequency and voltage, in exchange for bursts of speed. It absolutely let them eke out as much performance as thermally possible and post as big a set of numbers as possible, but it often just wrecked the experience. And turned your desktop into a coffee warmer. And your laptop into a heat blanket.
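To put rough numbers on why that trade hurts, here's a small sketch using the textbook approximation that dynamic power scales with capacitance times voltage squared times frequency. The 20% frequency and 15% voltage bumps are made-up illustrative values, not figures from Intel or Apple.

```python
def relative_dynamic_power(frequency_scale, voltage_scale):
    """Dynamic power relative to baseline, using P ~ C * V^2 * f with C held constant."""
    return frequency_scale * voltage_scale ** 2


# Hypothetical turbo: 20% more frequency that needs 15% more voltage.
boosted = relative_dynamic_power(frequency_scale=1.20, voltage_scale=1.15)
print(f"{boosted:.2f}x the baseline power")
```

Under those assumptions, a 20% speed bump costs close to 60% more power, and all of it ends up as heat.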

With M1, there's no opportunistic turbo, no need for it at all. It doesn't matter if it's in a MacBook Air or a MacBook Pro or a Mac mini. M1 just never forces itself to fill out the thermal capacity of the box.

The silicon team knows exactly the machines they're building for, so they can build to fill those designs not as maximally as possible but as efficiently.

They can use wider, slower cores to handle more instructions at lower power and much less heat.

That let them do things like increase the frequency of the e-cores in the M1 to 2GHz, up from 1.8, I think, on the A14, and the p-cores to 3.2GHz, up from 3.1GHz on the A14.

This is why Apple has an efficiency-performance architecture, what other companies market as big/little — they want to keep pushing performance at the high end without losing efficiency at the lower end. Still, the efficiency cores just keep getting more and more capable.

Just the four efficiency cores in M1 deliver performance equivalent to the Intel Y-series processor that was in the previous generation MacBook Air. Which, ouch.

So, now, you have all the M1 chipsets in all the M1 machines capable of running at the same peak frequency.

The only difference is the thermal capacity of those machines. The MacBook Air is focused on no fan, no noise. So, for low power, lower workloads, single-threaded apps, its performance will be the same as all the other M1 machines.

But, for higher power, higher workloads, heavily threaded apps, sustained for 10 minutes or longer, things like rendering longer videos, doing longer compiles, playing longer games, that's where the thermal capacity will force the MacBook Air to ramp down.

What that means is, for a single core, M1 is not thermally limited. Even pushing the frequency, it's perfectly comfortable. So, for many people and a lot of workloads, the MacBook Air's performance will be almost indistinguishable from… the Mac mini.

For people with more demanding workloads, if they heat up the MacBook Air enough, that heat will go from the die to the aluminum heat spreader, then on into the chassis, and if the chassis gets saturated, the control system will force the performance controller to pull back on the CPU and GPU and reduce the clock speeds.

Where, on the 2-port MacBook Pro, the active cooling system would kick in to allow those workloads to sustain for longer, and on the Mac mini, its thermal envelope and active cooling would basically just let the M1 sustain indefinitely at this point.
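Here's a toy simulation of that behavior, as a sketch only. Every number in it (the thermal mass, the wattage, the temperature limit, the cooling rates) is invented for illustration, and the crude step-down, step-up controller is an assumption, not how Apple's performance controller actually works. It does show the shape of the thing: same chip, same peak clock, and only the enclosure decides how long that clock holds.

```python
MAX_CLOCK_MHZ = 3200
MIN_CLOCK_MHZ = 1000
STEP_MHZ = 100


def simulate(cooling_w_per_c, seconds=600, limit_c=40.0):
    """Return the clock (MHz) chosen at each one-second step of a sustained load."""
    ambient_c = 25.0
    heat_capacity_j_per_c = 50.0   # made-up thermal mass of the chassis
    full_power_w = 10.0            # made-up package power at the top clock
    temp_c = ambient_c
    clock_mhz = MAX_CLOCK_MHZ
    history = []
    for _ in range(seconds):
        power_w = full_power_w * clock_mhz / MAX_CLOCK_MHZ
        shed_w = cooling_w_per_c * (temp_c - ambient_c)
        temp_c += (power_w - shed_w) / heat_capacity_j_per_c
        if temp_c > limit_c and clock_mhz > MIN_CLOCK_MHZ:
            clock_mhz -= STEP_MHZ  # chassis saturated: pull back
        elif temp_c < limit_c - 2.0 and clock_mhz < MAX_CLOCK_MHZ:
            clock_mhz += STEP_MHZ  # headroom again: ramp back up
        history.append(clock_mhz)
    return history


fanless = simulate(cooling_w_per_c=0.4)     # passive chassis sheds heat slowly
fan_cooled = simulate(cooling_w_per_c=1.0)  # a fan sheds heat faster
```

In this model the fanless run starts at the full clock and only drops once the chassis saturates, while the fan-cooled run never leaves the top clock. A real controller would be far smoother than this one, which overshoots and oscillates.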

But it also means that now even the MacBook Air is suddenly a really high-performance system because Apple no longer has to cram a 40 or 60-watt design into a 7-10 watt chassis. M1 lets the Air be the air, with performance enabled by its efficiency.

Unified Memory

One of the other big misconceptions… or maybe just confusions?… about M1 is unified memory. It's something Apple has been using on the A-series chipsets for a long while now, and something very different from the dedicated, and separate, system and graphics memory of the previous Intel machines.

What unified memory basically means is that all the compute engines, the CPU, GPU, ANE, even things like the image signal processor, the ISP, all share a single pool of very fast, very close memory.

That memory isn't exactly off the shelf, but it's not radically different either. Apple uses a variant of 128-bit wide LPDDR4X-4266, with some customizations, just like they use in the iPhone and iPad.
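For a sense of scale, the theoretical peak bandwidth falls straight out of those two numbers. This is a back-of-the-envelope sketch: the function name is mine, and the result is the arithmetic ceiling for a 128-bit bus at 4266 megatransfers per second, not a measured figure.

```python
def peak_bandwidth_gb_per_s(bus_width_bits, transfers_per_second_millions):
    """Theoretical peak bandwidth in GB/s (decimal gigabytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * transfers_per_second_millions * 1e6 / 1e9


m1_peak = peak_bandwidth_gb_per_s(128, 4266)
print(f"{m1_peak:.1f} GB/s")
```

That works out to a little over 68 GB/s, shared by every engine on the chip.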

It's the implementation that offers some significant advantages. For example, because those Intel architectures have separate memory, they weren't exactly efficient and could waste a lot of time and energy moving or copying data back and forth so it could be operated on by the different compute engines.

Also, in low power, integrated systems like the MacBooks and other ultrabooks, there typically wasn't a lot of video RAM, to begin with, and now the M1 GPUs have access to far greater amounts from that shared pool, which can lead to significantly better graphics capabilities.

And because modern workloads aren't as simple as draw call send-it-and-forget-it anymore, and computational tasks can be round-tripped between the different engines, both the reduction in overhead and increase in capability really, really start to add up.

That's especially true when coupled with things like Apple's tile-based deferred rendering. This means, instead of operating on an entire frame, the GPU operates on tiles that can live in-memory and be operated on by all the compute units in a far, far, far more efficient way than traditional architectures allow. It's more complicated, but it's ultimately higher performance. At least so far. We'll have to see how it scales beyond the integrated graphics machines and into the machines that have had more massive discrete graphics up until now.

How much that translates into the real world will also vary. For apps where developers have already implemented a ton of workarounds for the Intel and discrete graphics architectures, especially where there hasn't been a lot of memory before, we may not see much impact from M1 until those apps get updated to take advantage of everything M1 has to offer. I mean, other than the boost they'll get just from the better compute engines.

For other workloads, it could well be night and day. For example, for things like 8K video, the frames get loaded quickly off the SSD and into unified memory, then, depending on the codec, it'll hit the CPU for ProRes or one of the custom blocks for H.264 or H.265, have effects or other processes run through the GPU, then go straight out through the display controllers.

All of that could previously have involved copying back and forth through the sub-systems, just all shades of inefficiently, but now it can all happen on an M1 machine. An ultra low power M1 machine.
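As a loose analogy in Python, and only an analogy, here's the difference between handing another engine a copy of a buffer and handing it a view onto the same bytes. The names `gpu_copy` and `gpu_view` are stand-ins I've made up; nothing here touches real GPU memory.

```python
frame = bytearray(b"\x00" * 8)   # stand-in for a decoded video frame

# Separate memory: the "GPU" works on its own copy, so results must be copied back.
gpu_copy = bytearray(frame)
gpu_copy[0] = 255
assert frame[0] == 0             # the original never saw the change

# Unified memory: the "GPU" gets a view onto the same bytes, no copy involved.
gpu_view = memoryview(frame)
gpu_view[0] = 255
assert frame[0] == 255           # the change is visible immediately
```

Scale that up from 8 bytes to 8K frames, many times a second, and the copies you don't make start to matter.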

Unified memory won't suddenly turn 8GB into 16GB or 16GB into 32GB. RAM is still RAM, and macOS is still macOS.

Unlike iOS, macOS doesn't deal with memory pressure by jettisoning apps. It has memory compression and machine-learning-based optimizations, and ultra-fast SSD swap — which, no, won't adversely affect your SSD any more today than it has for the last 10 years or so Apple and everyone else has been doing it.

But the architecture and software will make everything just feel better — make that RAM be just all that it can be.

Rosetta 2

One of the problems Apple faced with moving to the M1 was that some apps weren't going to be available as universal binaries, not in time for launch, and maybe not for a good long time.

So, where they had the original Rosetta to emulate PowerPC on Intel, they decided to create Rosetta 2 for Intel on Apple Silicon. But, Apple had no direct control over the Intel chips. They could push Intel into making chips that would fit into the original MacBook Air, but they couldn't get them to design silicon that would run PowerPC binaries as efficiently as possible.

Well… Apple has direct control over Apple Silicon. They had years for the software team to work with the silicon team to make sure M1 and future chipsets would run Intel binaries absolutely as efficiently as possible.

Apple hasn't said much about what exactly they're doing in terms of specific Rosetta 2-accelerating IP, but it's not hard to imagine that Apple looked at areas where Intel and Apple Silicon behaved differently and then built in extra bits specifically to anticipate and address those differences as efficiently as possible.

That means there's nowhere near the performance hit there'd otherwise be with traditional emulation. And, for Intel binaries that are Metal-based and GPU bound, because of M1, they can now run faster on these new Macs than on the Intel Macs they replaced. Which… takes a moment to wrap your brain around.

Again, no magic, no pixie dust, just hardware and software, bits and atoms, performance and efficiency working incredibly closely together, smart choices, solid architecture, and systematic, steady improvements year after year.

The philosophy

There's this other misconception, maybe reductionist, maybe myopic, where people are just looking for one thing that explains the difference in performance efficiency pretty much every test has now shown between M1 Macs and the exact same Intel machines they replaced, and often even much higher-end Intel machines. And there just isn't one thing. It's everything. The entire approach. Each part perfectly obvious in hindsight, but the result of a lot of big architectural investments paying off over a lot of years.

I know a lot of people dunked on Apple's Bezos-style graphs during the M1 announcement, even called it a lack of confidence on Apple's part… even though Apple was basically comparing against the top-end Tiger Lake part at the time, then basically walked over and just dropped their own M1 die shot right on the table, right after the event, which is about as confident as you can get for a new PC silicon platform.

But those graphs were still based on real data and showed the true philosophy behind M1.

Apple wants to make balanced systems, where CPU and GPU performance complement each other, and the memory bandwidth is there to support them.

They don't care about Deadpool-style MAXIMUM PERF in terms of a spec sheet number, not if it comes at the expense of efficiency. But, because of the efficiency, even modest increases in performance can feel significant.

They're not architecting for the number, for the highest right point on those graphs, but for the experience. But they're opportunistically getting that number and a pretty good point on those graphs as well. At least so far on these lower power chipsets. By making them the most efficient, Apple has ended up making them the higher performance as well. It's a consequence of the approach, not the goal.

And it pays off in experience, where everything just feels far more responsive, far more fluid, far more instantaneous than any Intel Mac has ever felt. Also in battery life, where doing the same workloads results in mind-bogglingly less battery drain.

You can just hammer on an M1 Mac in ways beyond which you could ever hammer on an Intel Mac and still end up with way better battery life on M1.

Next silicon steps

M1 was built specifically for the MacBook Air, the 2-port MacBook Pro — which I've semi-jokingly referred to as the MacBook Air Pro — and a new, silver-again, lower power Mac mini. I think that last one mostly because Apple exceeded even their own expectations and did it because they realized they could do it and not force desktop stans to wait until a more powerful chip was ready for the more powerful space gray models.

But there are more than just these Macs in Apple's lineup, so even though we just got M1, the moment after we got it, we were already wondering about M1X, or whatever Apple calls what comes next. The silicon that'll power the higher-end 13 or 14-inch MacBook Pro and the 16-inch, that space gray Mac mini and at least the lower-end iMac as well. And beyond that, the higher-end iMacs and eventual Mac Pro.

Sometime within the next 18 months, if not sooner.

As impressive as the M1 chipset is, as Apple's 11th generation scalable architecture has performed, it is still the first custom silicon for the Mac. It's just the beginning: the lowest-power, lowest-end of the lineup.

Because Johny Srouji's graphs weren't marketecture, we can look at them and see how exactly Apple is handling performance efficiency and where the M-series will go as it continues up that curve.

Back at WWDC, Johny said a family of SoCs, so we can imagine what happens when they cruise past that 10-watt line when they go beyond eight cores to 12 or more.

Beyond that, does this mean that Apple's M-series, and the Macs they power, will be kept as up-to-date as iPads have, getting that latest, greatest silicon IP the same year or shortly thereafter? In other words, will M2 follow as quickly as A15, and so on?

Apple's silicon team doesn't get to take a year off. Every generation has to improve. That's the downside of not being a merchant silicon provider, of not just targeting peak performance on paper or having to hold back on the top line just to increase the bottom line.

The only things Apple is ever willing to be gated by are time and physics, nothing else. And they have 18 months left just to get started.

Rene Ritchie is one of the most respected Apple analysts in the business, reaching a combined audience of over 40 million readers a month. His YouTube channel, Vector, has over 90 thousand subscribers and 14 million views and his podcasts, including Debug, have been downloaded over 20 million times. He also regularly co-hosts MacBreak Weekly for the TWiT network and co-hosted CES Live! and Talk Mobile. Based in Montreal, Rene is a former director of product marketing, web developer, and graphic designer. He's authored several books and appeared on numerous television and radio segments to discuss Apple and the technology industry. When not working, he likes to cook, grapple, and spend time with his friends and family.