Siri Shortcuts: The next leap forward in push interface

Some still assume Siri is only a voice-based assistant. It was, initially, but since iOS 9 it's also been a text-based assistant. That's when the name Siri also began to be used for proactive, intelligent search and suggestions.

Then, when Apple announced Siri Shortcuts, some assumed it was only a power-user feature that would let nerds assign voice triggers to actions and automations. While that's true, it's only half the story. In addition to the power-user aspect, there's an equally important — potentially even more important — aspect to Siri Shortcuts that's all about the mainstream.

I'd go so far as to say it's best to think of Shortcuts as two separate, if related, services:

- Proactive prompting for mainstream users that'll gently lead them into greater convenience they never knew they needed.

- Voice-triggerable automation for nerds that'll let them do much of what they've always wanted.

And, of the two, I think the first will not only be super easy to get started with — it'll be transformative.

From pull to push

For years we've been slowly, inexorably transitioning from pull to push interface.

Pull interface is what most of us are familiar with, going back to the early days of computing: if you want to do something, you first have to find the app or feature that does it, then find the action within that app or feature, and only then, after spelunking through the operating system and app stack to hunt it down, can you actually do it. It imposes both temporal and cognitive load, and it's part of what's made traditional computing confusing and even off-putting to large swaths of people.

Push interface is the opposite: Instead of you drilling down to find what you want, what you want comes and finds you. Notifications are a rudimentary example. Instead of launching a messages app, finding a conversation thread, and checking to see if there's anything you need to reply to, the notification comes and tells you there's something new to reply to. Actionable notifications were another step forward. Instead of hitting a notification and going to an app, you could act right in the notification.


But notifications are driven only by simple, event-based triggers. Someone sends you a message. An alarm or reminder goes off. A score changes or news breaks. They're reactive.

What Apple did with Siri was an attempt to become proactive.

It built on Continuity and Extensibility, two iOS 8 features that remain among the most important ever added to the platform. The former, among other things, let apps bookmark and sync user activity. The latter let that activity be accessed securely from other parts of the system and even from other apps.

Just as websites were unbundled into web APIs that could be called from other sites or from within apps, binary app blobs were unbundled into discrete functionality that could be deep linked into, surfaced in share sheets, called from other apps, and even projected onto other hardware.

With that foundation, Siri could suggest apps, and even actions within apps, based on signals like time, location, and past behavior.

Wake your iPhone and you might see it offer to play your workout mix, if that's usually how you start your day. Or swipe to the minus-one Home screen and see directions to the restaurant where you'd reserved dinner, along with traffic information to help you pick your departure time.

It was useful, but it was extremely limited.

What Apple's done with iOS 12 and Siri Shortcuts is to start removing those limitations.

Not with automation. Again, that's for the power users. But with suggestions. Those are for the mainstream.

Shortcutting to Shortcuts

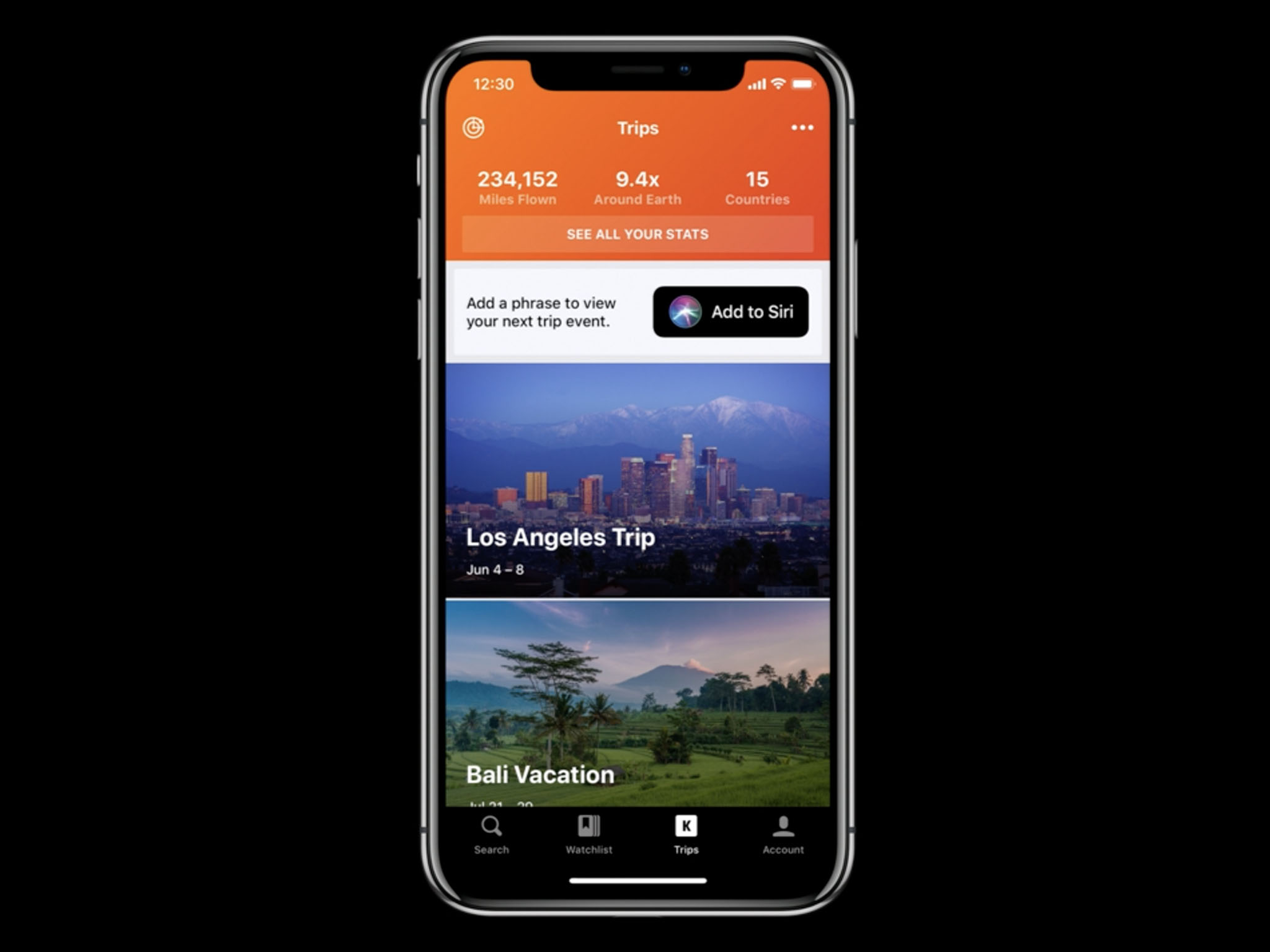

Developers can tap into the Continuity-derived user activity to make specific places within their apps, such as a particular screen or piece of content, available to the system. And they can use a new Intents API to tell the system, more expansively, which actions are available in the app.

Once that's done, Siri keeps track of which of those places and actions you use, and when, and tries to guess when you'll use them next. Then, instead of you having to go find the app and dig down into the function you want, it presents that function to you right before it thinks you'll want it, and right where you already are in the operating system.
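To make that bookkeeping a little more concrete, here's a toy model in Python: record each time an app reports ("donates") an action, bucket those records by day of week and hour, and surface the most frequent action when that slot comes around again. Everything here (the `Donation` record, `ToySuggestionEngine`, the `min_count` threshold, the hypothetical `PizzaApp`) is an illustrative assumption and far simpler than whatever Siri actually does on device; real apps donate through `NSUserActivity` and the Intents framework, not anything like this.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Donation:
    """Hypothetical record of one in-app action the user performed."""
    app: str
    action: str
    when: datetime


class ToySuggestionEngine:
    """Toy predictor (not Siri's real model): counts donated actions per
    (weekday, hour) slot and suggests the most frequent one for a slot once
    it has been seen at least min_count times."""

    def __init__(self, min_count: int = 2) -> None:
        self.min_count = min_count
        # Maps (weekday, hour) -> Counter of (app, action) pairs.
        self._slots: dict[tuple[int, int], Counter] = {}

    @staticmethod
    def _slot(when: datetime) -> tuple[int, int]:
        # weekday(): Monday == 0 ... Sunday == 6; hour is 0..23.
        return (when.weekday(), when.hour)

    def donate(self, donation: Donation) -> None:
        """Record that the user performed an action at a given time."""
        slot = self._slot(donation.when)
        self._slots.setdefault(slot, Counter())[(donation.app, donation.action)] += 1

    def suggest(self, now: datetime) -> tuple[str, str] | None:
        """Return the (app, action) most often done in this slot, or None."""
        counts = self._slots.get(self._slot(now))
        if not counts:
            return None
        app_action, seen = counts.most_common(1)[0]
        # Only suggest once a habit has repeated often enough.
        return app_action if seen >= self.min_count else None


if __name__ == "__main__":
    engine = ToySuggestionEngine()
    # Three Sunday afternoons ordering the usual pizza before the game.
    for day in (3, 10, 17):  # June 3, 10, and 17, 2018 were Sundays
        engine.donate(Donation("PizzaApp", "Order usual", datetime(2018, 6, day, 15, 5)))
    print(engine.suggest(datetime(2018, 6, 24, 15, 30)))  # ('PizzaApp', 'Order usual')
    print(engine.suggest(datetime(2018, 6, 25, 15, 30)))  # None (a Monday)
```

Bucketing by weekday and hour is just the simplest signal that captures the "pizza before the game on Sunday" pattern; a real system would weigh many more signals, such as location and what you were just doing.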

For example, if you always order pizza before the game on Sunday, then instead of having to go to the pizza app, pick your favorite, and place your order, you'll find a banner waiting for you right on your Lock screen, ready with your favorite order.

If you always text your child to say you're on your way home from work, then instead of having to go to Messages, find the conversation with your child in the list, and tap to start a new message, you'll find a banner waiting for you, ready to send that message with a single tap.

Sure, if you want to, you can add a voice trigger to any shortcut. "Pizza time!" or "Homeward bound" could let you initiate those actions any time you want. It effectively lets you add Alexa Skills-like functionality without having to navigate an endless list of Skills you'd never want or need. (Downloading an app signals that you're interested in Skills-like functionality related to that app, which gives you a much more manageable, relevant list to choose from.)

And that's really cool. It's sci-fi. But it's still pull-based interface.

What's powerful is the new engine that will help mainstream users discover shortcuts at the right time and in the right context. That's push-based. And shortcuts surfaced that way couldn't be easier to get started with.

Pushing the limits

I've been using the iOS 12 beta for a week now. In that time, my Lock screen has offered to put my phone into Do Not Disturb when a Wallet pass, OpenTable, and even a simple iMessage indicated I might be having dinner or breakfast.

It hasn't offered to let me order my usual Philz Mint Mojito, because I don't have the Shortcuts-enabled version of that app (yet!), but it has offered me directions to Philz after I used Maps for walking directions the first couple of days of the conference.

Come this fall, apps will be able to suggest common or appropriate Shortcuts as well, right on the launch screen.

Combined with the Lock screen, that means a ton of Shortcuts suggested to a ton of users. And the same hundreds of millions of people who learned to download apps, with all the initial overhead that involved, will learn how to take advantage of Shortcuts.

And we'll be another step closer to the next big revolution in human interface.

Rene Ritchie is one of the most respected Apple analysts in the business, reaching a combined audience of over 40 million readers a month. His YouTube channel, Vector, has over 90 thousand subscribers and 14 million views and his podcasts, including Debug, have been downloaded over 20 million times. He also regularly co-hosts MacBreak Weekly for the TWiT network and co-hosted CES Live! and Talk Mobile. Based in Montreal, Rene is a former director of product marketing, web developer, and graphic designer. He's authored several books and appeared on numerous television and radio segments to discuss Apple and the technology industry. When not working, he likes to cook, grapple, and spend time with his friends and family.