Apple Vision Pro sensors: What they all do, and where they are on the headset

The new Vision Pro contains a staggering 20 sensors — let’s find out why.

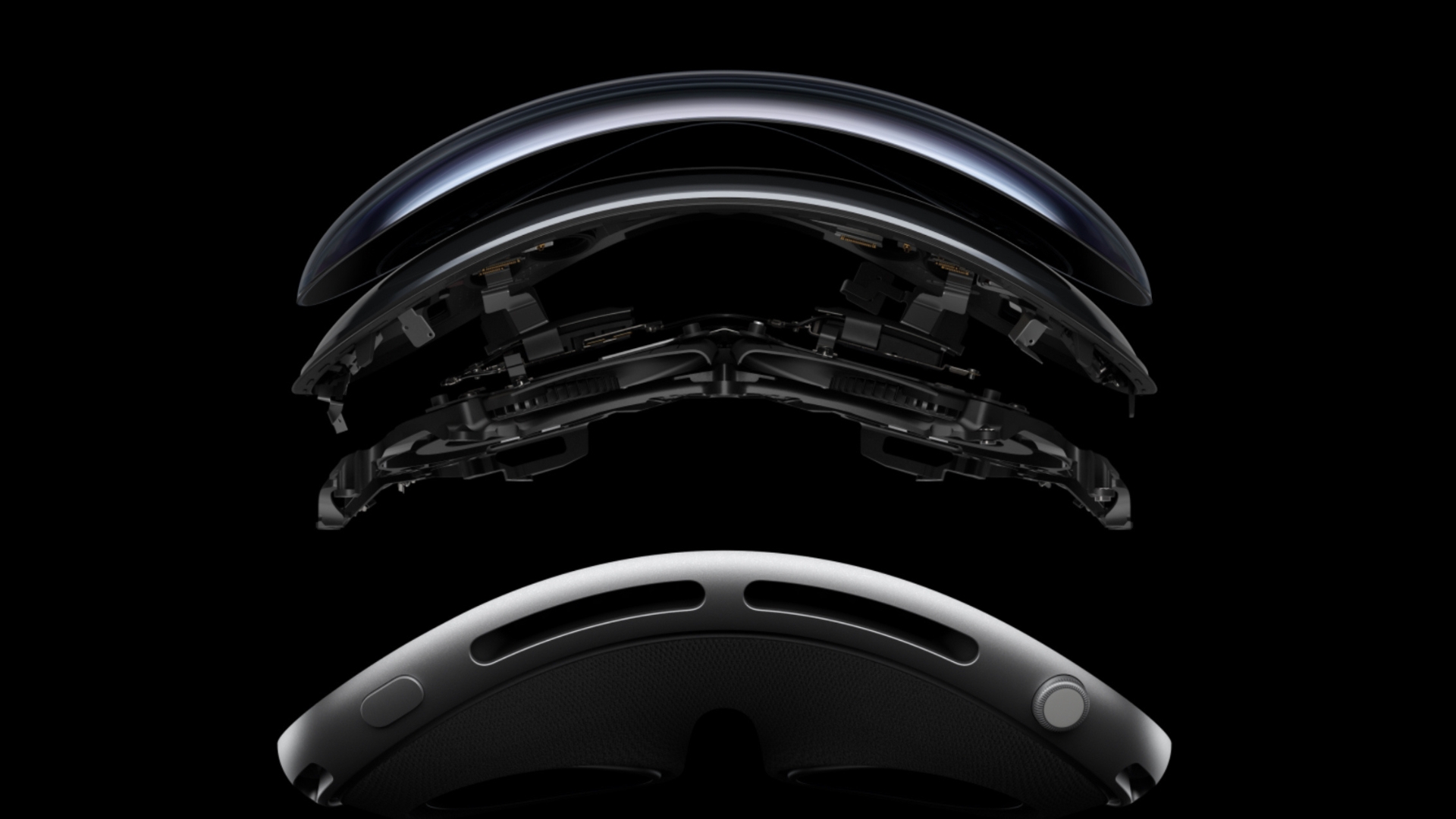

The world is currently being wowed by Apple’s revolutionary new Vision Pro AR/VR headset, which manages to look simultaneously like a bit of tech from 10 years in the future and a pair of run-of-the-mill ski goggles from the ‘90s. But with the Vision Pro, it’s not the outside that counts. The real action is on the inside, and it’s the sensors that let the headset usher in the era of spatial computing and be the game changer that Apple hopes it will be.

Here, we’re going to take a deep dive into how the Vision Pro uses its sensors to track you, and what each of them is capable of doing.

Tracking Cameras

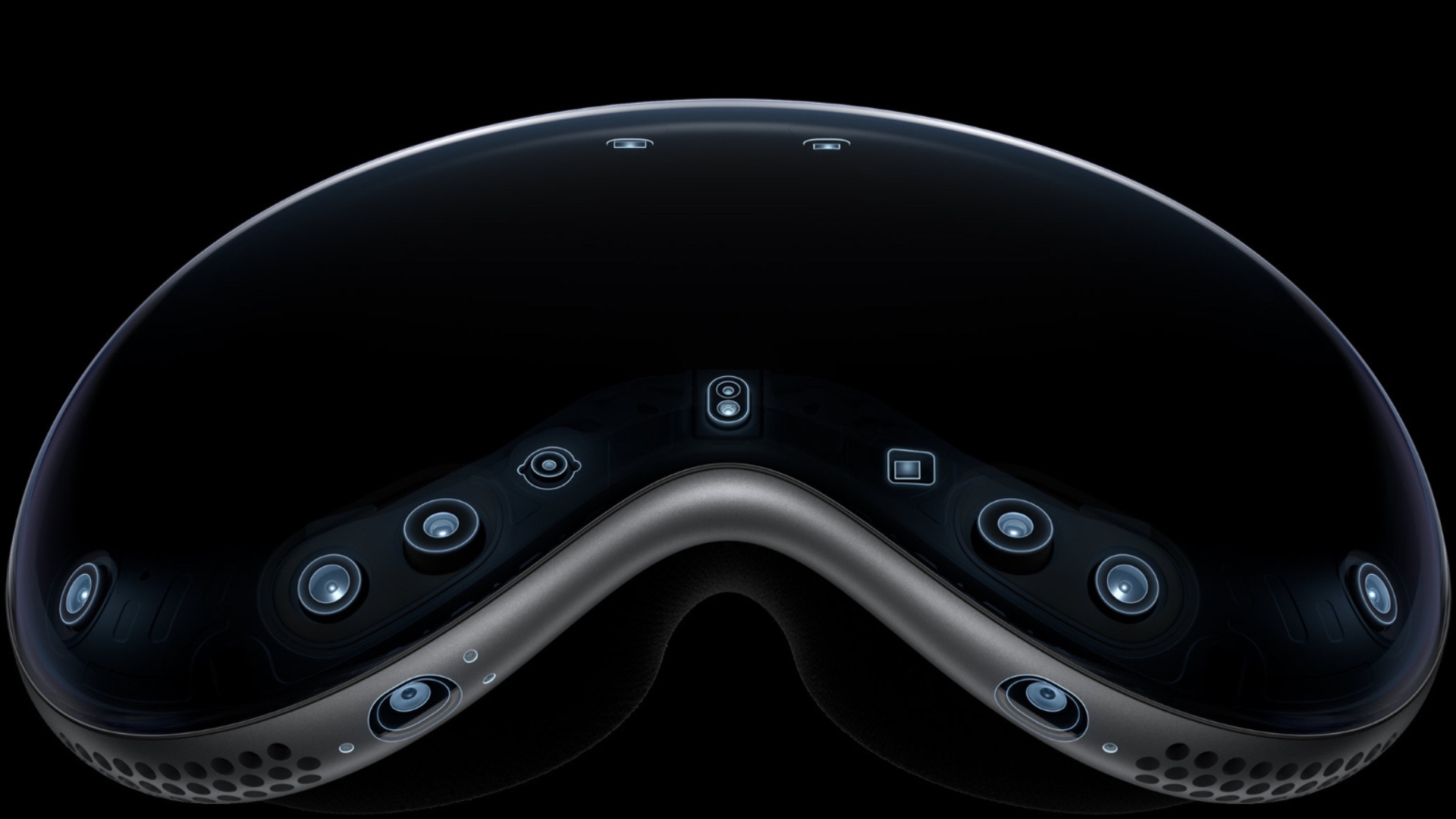

First, let’s take a look at the cameras on the outside of the device. These are the two high-resolution main cameras and six world-facing tracking cameras that sense your environment in stereoscopic 3D. If you’re familiar with Vision Pro at all, you’ll know that it can take photos and video of what you’re looking at, and that’s what the main cameras are used for.

Vision Pro’s main Home View interface (which you use to access all your apps and experiences) is navigated by a combination of eye movement and hand gestures. You simply look at an icon and tap your fingers together to select it. That’s why so many tracking cameras face the outside world: Vision Pro has to detect even your tiniest finger movements. To make a photo you’re looking at bigger, for example, you simply look at the corner of the photo, then pinch and drag with your fingers. That hand and arm motion is pretty small, but Vision Pro will detect it thanks to the six tracking cameras.

Now let’s take a look inside the device. Eye tracking (not EyeSight, which is something different) is obviously very important to Vision Pro. It needs to be able to discern eye movement inside the headset, and this is done by four internal infrared tracking cameras that follow every movement your eyes make. So, thanks to these four cameras, simply looking right at an icon in the Home View interface highlights it, ready for you to select.

But the Vision Pro also contains another internal camera, a TrueDepth camera, so that it can authenticate the user. TrueDepth cameras were first introduced in the iPhone for Face ID and contain some of the most advanced hardware and software Apple has ever created for capturing accurate face data. Thousands of invisible dots are projected onto your face to create a depth map, and an infrared image is also captured. Whether Vision Pro does this to your whole face or just your eyes, we’re not sure, since it uses iris-based biometric authentication (Apple calls this Optic ID), but it’s using TrueDepth technology to help it do so.

LiDAR scanner

LiDAR is another technology that Apple has already used in the best iPhones and iPads. LiDAR stands for Light Detection and Ranging, and it works by emitting laser light and timing the reflection to measure accurately how far away surrounding objects are. Apple claims the LiDAR scanner in the iPhone enables it to take better photos in the dark by helping the camera autofocus six times faster, so you don’t miss a great shot.

You don’t have to do anything for this to happen. You just aim your camera in the dark and take a shot. Apple also has its free Measure app, which can make use of the LiDAR scanner to measure distances for you. So, what is it doing in the Vision Pro? Well, Vision Pro floats the Home View over your surroundings, so it needs to know how close you are to walls and furniture. All this is achieved using its LiDAR scanner.
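To make the ranging idea concrete, here is a minimal sketch of the time-of-flight arithmetic behind LiDAR in general: a light pulse goes out, bounces back, and the distance is half the round trip multiplied by the speed of light. The function names are our own for illustration, and this is not Apple’s implementation, which the company hasn’t detailed.

```python
# Speed of light in a vacuum, in metres per second (exact by definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_seconds: float) -> float:
    """Return the one-way distance in metres for a measured round-trip time.

    Hypothetical helper: assumes a simple time-of-flight model in which
    the pulse travels to the object and straight back.
    """
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse covers the distance twice (out and back), so halve it.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


def round_trip_for_distance(distance_m: float) -> float:
    """Return how long a pulse takes to reach an object and come back."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    # A wall two metres away returns the pulse in roughly 13 nanoseconds.
    t = round_trip_for_distance(2.0)
    print(f"round trip: {t * 1e9:.2f} ns")
    print(f"distance:   {distance_from_round_trip(t):.2f} m")
```

The tiny round-trip times involved give a sense of why this kind of measurement needs dedicated hardware rather than an ordinary camera.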

Four inertial measurement units

Inertial Measurement Units (IMUs) are typically used in drones. They are devices that combine inertial sensors like gyroscopes and accelerometers to provide acceleration and orientation data, so you can measure pitch, roll, and yaw. They can also be used to calculate position and velocity. Vision Pro is using these to maintain its sense of where it is in the world. After all, you can’t expect people to stay in one place when using mixed reality.
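As a rough illustration of how raw IMU readings turn into orientation and position, here is a minimal sketch. It assumes a simplified axis convention in which a device lying flat and still reads (0, 0, +g) on its accelerometer, and every function name is hypothetical. Real headsets fuse several sensors with far more sophisticated filtering.

```python
import math


def pitch_roll_from_gravity(ax: float, ay: float, az: float) -> tuple[float, float]:
    """Estimate pitch and roll in degrees from one accelerometer reading at rest.

    Assumed convention (for illustration only): a device lying flat and
    still reads (0, 0, +g), so gravity alone tells us how it is tilted.
    """
    pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))
    roll = math.degrees(math.atan2(ay, az))
    return pitch, roll


def integrate_yaw(yaw_rates_dps: list[float], dt: float, yaw0: float = 0.0) -> float:
    """Accumulate gyroscope yaw rates (degrees per second) into a yaw angle."""
    yaw = yaw0
    for rate in yaw_rates_dps:
        yaw += rate * dt
    return yaw


def dead_reckon(accels: list[float], dt: float) -> tuple[float, float]:
    """Integrate acceleration along one axis into (velocity, position)."""
    velocity = 0.0
    position = 0.0
    for a in accels:
        velocity += a * dt         # acceleration -> velocity
        position += velocity * dt  # velocity -> position
    return velocity, position


if __name__ == "__main__":
    print(pitch_roll_from_gravity(0.0, 0.0, 9.81))  # flat and level
    print(integrate_yaw([90.0] * 10, 0.1))          # one second of turning
    print(dead_reckon([1.0] * 10, 0.1))             # one second of 1 m/s^2
```

Integrating twice like this lets small sensor errors pile up quickly, which is one reason IMU data is usually combined with camera tracking rather than trusted on its own.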

Flicker sensor

A flicker sensor was first introduced by Apple way back in the iPhone 7. It reads the flickering of artificial light so that the device can compensate for it in the photos and videos you take. Since the Vision Pro is primarily used indoors, in environments lit by artificial light, you can see why Apple would need one here: when you see the world around you through the headset, there should be no visible flickering from the lights.
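To show what “reading the flicker” might involve, here is a minimal sketch that checks a series of brightness samples for the two usual flicker rates: lights on 50Hz mains flicker at 100Hz, and lights on 60Hz mains flicker at 120Hz. The function names, the sample rate, and the detection threshold are all assumptions for illustration, not details of Apple’s sensor.

```python
import math


def tone_power(samples: list[float], sample_rate_hz: float, freq_hz: float) -> float:
    """Measure how strongly one frequency is present, using a single-bin DFT."""
    n = len(samples)
    mean = sum(samples) / n  # remove the steady (non-flickering) light level
    re = sum((s - mean) * math.cos(2 * math.pi * freq_hz * i / sample_rate_hz)
             for i, s in enumerate(samples))
    im = sum((s - mean) * math.sin(2 * math.pi * freq_hz * i / sample_rate_hz)
             for i, s in enumerate(samples))
    return (re * re + im * im) / (n * n)


def detect_flicker_hz(samples, sample_rate_hz, candidates=(100.0, 120.0),
                      min_power=1e-6):
    """Return the strongest candidate flicker frequency, or None if the light is steady.

    The candidate list and min_power threshold are illustrative guesses.
    """
    best = max(candidates, key=lambda f: tone_power(samples, sample_rate_hz, f))
    if tone_power(samples, sample_rate_hz, best) < min_power:
        return None
    return best


if __name__ == "__main__":
    rate = 1000.0  # assumed sensor sample rate in Hz
    # Simulated lamp on 60Hz mains: steady light plus a 120Hz ripple.
    lamp = [1.0 + 0.2 * math.sin(2 * math.pi * 120.0 * i / rate) for i in range(200)]
    print(detect_flicker_hz(lamp, rate))
    print(detect_flicker_hz([1.0] * 200, rate))  # steady light, no flicker
```

Once the flicker rate is known, a camera system can time its exposures to match, which is the general idea behind the compensation described above.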

Ambient light sensor

The final sensor on the Apple Vision Pro is the ambient light sensor. An ambient light sensor measures how bright the surrounding area is so that the device can adjust its screen brightness to match. You find them in all sorts of Apple products, like Apple Watch and iOS devices, but the most famous use is for lighting up your MacBook keyboard once it gets dark. Vision Pro is clearly going to need one because it has to know how bright to make the display, depending on whether it’s being used in daylight or in a dark environment.
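Here is a minimal sketch of how an ambient light reading could be turned into a display brightness level. It assumes a logarithmic mapping, because perceived brightness tracks the logarithm of light level more closely than the raw value. The function name and every threshold are made-up values for illustration, not anything Apple has published.

```python
import math


def brightness_for_lux(lux: float,
                       min_lux: float = 1.0,
                       max_lux: float = 10_000.0,
                       min_level: float = 0.05,
                       max_level: float = 1.0) -> float:
    """Map an ambient light reading in lux to a brightness level.

    All defaults are hypothetical: 1 lux is treated as a dark room,
    10,000 lux as daylight, and brightness never drops below 5%.
    """
    # Clamp the reading so extreme values don't push us out of range.
    lux = min(max(lux, min_lux), max_lux)
    # Position of the reading on a log scale between the two limits (0..1).
    t = math.log10(lux / min_lux) / math.log10(max_lux / min_lux)
    return min_level + t * (max_level - min_level)


if __name__ == "__main__":
    for reading in (0.5, 10, 100, 1_000, 50_000):
        print(f"{reading:>8} lux -> {brightness_for_lux(reading):.0%}")
```

With a mapping like this, going from a dim room to a bright office changes the display about as much as going from that office to daylight, even though the jump in lux is far bigger in the second case.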

Microphones?

Finally, we need to think about microphones. Although Apple remains tight-lipped about exactly what the six microphones inside Vision Pro do, they are probably being used for more than just voice commands, in a way that makes them almost sensors. They are most likely performing an acoustic scan of the room to help create the illusion of augmented reality and to keep sounds in the same place when you turn your head.

We'll update this section and the rest of the article with further information on the Vision Pro sensors once we find out more.

Graham is the Editor in Chief for all of Future’s tech magazines, including Mac|Life, MaximumPC, MacFormat, PC Pro, Linux Format and Computeractive. Graham has over 25 years of experience writing about technology and has covered many of the big Apple launches first hand, including the iPhone, iPad and Apple Music. He first became fascinated with computing during the home computer boom of the 1980s, during which he wrote a text adventure game that was released commercially while he was still at school. After graduating from university with a degree in Computer Science, Graham started as a writer on Future’s PC magazines, eventually becoming editor of MacFormat in 2004, then Editor in Chief across the whole of Future’s tech magazine portfolio in 2013. These days Graham enjoys writing about the latest Apple tech for iMore.com as well as Future’s tech magazine brands.