Why Apple is putting Dolby Vision cameras on the iPhone 12

When scuttlebutt started spreading about Apple working on a "Dolby Vision" camera for the iPhone 12, my mind immediately started racing.

https://twitter.com/reneritchie/status/1315815991260184576

See, several years ago, during Apple's Worldwide Developers Conference (WWDC), I was invited by Dolby to see its then-new Dolby Vision system.

They showed us the trailer for Star Wars: The Last Jedi in both 4K and 1080p. The 4K was SDR, or standard dynamic range. The 1080p was HDR, or high dynamic range.

And it was no contest — the 1080p HDR just blew the 4K SDR out of the skies and straight back to Mos Eisley. And Dolby knew it. Their team had been smiling the whole time.

Toxic Spec Syndrome

We saw something similar back when Apple announced the iPhone XR and a few tech nerds facepalmed over "not even 1080p in 2018". Then, presumably, they double facepalmed when the iPhone 11 kept the same display in 2019.

But both went on to be the best-selling phones of their respective years. Why? No, not because the general public or mainstream consumers are stupid. Far from it. En masse, they're way smarter than any tech nerd.


Because they intrinsically understood the same thing the super-tech nerds did: a display is about more than any one spec.

You can have really high density, just a ton of pixels, sure. But if they're crappy pixels, it's just a waste of time and materials.

It's the damn megapixel camera race all over again.

Quantity vs. quality

Apple has understood this for years. Once you reach a sufficient density at a certain size and distance — what Apple calls Retina — the human eye can't distinguish the individual pixels, so cramming even more in is just… yeah, a waste. So, they went 2x Retina for LCD, 3x for OLED, and then they went to work on other things like uniformity, color management, wide gamut, and more.
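To make the "can't distinguish the pixels" idea concrete, here's a rough sketch that converts pixel density and viewing distance into pixels per degree of vision. The 60-pixels-per-degree threshold (roughly one arcminute of visual acuity) and the 12-inch viewing distance are assumptions for illustration, not Apple's own definition of Retina; 326 ppi is the density of the iPhone XR and 11 displays.

```python
import math

# Assumption (not Apple's definition): ~1 arcminute of visual acuity,
# i.e. about 60 pixels per degree, is treated as the "can't see pixels" line.
ACUITY_PPD = 60.0


def pixels_per_degree(ppi: float, distance_in: float) -> float:
    """How many pixels fit inside one degree of vision at a given distance."""
    # One degree of visual angle spans 2 * d * tan(0.5 deg) inches at distance d.
    inches_per_degree = 2 * distance_in * math.tan(math.radians(0.5))
    return ppi * inches_per_degree


def looks_retina(ppi: float, distance_in: float) -> bool:
    """True if individual pixels should be below the assumed acuity limit."""
    return pixels_per_degree(ppi, distance_in) >= ACUITY_PPD


# Hypothetical viewing distances for a 326 ppi phone display.
for distance in (8, 12):
    ppd = pixels_per_degree(326, distance)
    print(f"{distance} in: {ppd:.1f} px/deg, retina-like: {looks_retina(326, distance)}")
```

Past the threshold, extra pixels buy nothing you can see at that distance, which is the argument for spending effort elsewhere.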

Dolby understood that as well. So, instead of just adding more pixels, they went to work on making better ones. In this case, once the density was high enough, they worked on dynamic range: how deep and inky they could make the blacks, how bright and detailed they could keep the whites, and how wide a range of colors, rich reds and vibrant greens, they could show in between.
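Dynamic range is often expressed in photographic stops, where each stop is a doubling of light. A minimal sketch, using made-up peak and black levels rather than the specs of any real panel:

```python
import math


def dynamic_range_stops(peak_nits: float, black_nits: float) -> float:
    """Dynamic range in stops: how many doublings separate black from peak."""
    if black_nits <= 0:
        # A perfect zero black would mean infinite contrast, so require > 0.
        raise ValueError("black level must be greater than zero")
    return math.log2(peak_nits / black_nits)


# Hypothetical values chosen only to illustrate the scale of the difference.
sdr = dynamic_range_stops(peak_nits=100, black_nits=0.1)
hdr = dynamic_range_stops(peak_nits=1000, black_nits=0.005)
print(f"SDR-like panel: {sdr:.1f} stops, HDR-like panel: {hdr:.1f} stops")
```

Deeper blacks count just as much as brighter whites here, since both widen the ratio.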

HDR10 vs. Dolby Vision

Now, there are several HDR standards out there. Because of course there are.

HDR10 is… the default. It's an open standard, and it's what everyone who just wants to check a box supports. The problem with HDR10 is that its metadata is static: it describes the entire video at once. That means if some scenes are darker or brighter, especially significantly so, HDR10 just won't do a good job optimizing for them. You get, in essence, the lowest common denominator of high dynamic range.

What Dolby Vision does is encode the metadata dynamically, scene by scene or even frame by frame, so if a scene changes, especially significantly, the metadata can change with it. As a result, you get a better representation not just of the video but throughout the video. (Dolby Vision can also go well beyond the color depth of HDR10, up to 12-bit, but I'm not focusing on that because Apple is only using 10-bit for now.)

It's like tweaking every photo you took during the day separately, individually, according to the needs of each photo, as opposed to just applying a standard batch filter to all of them at once.
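Here's a toy model of that difference, assuming each scene is summarized only by its brightest highlight and that the display simply scales brightness linearly. Real HDR10 and Dolby Vision tone mapping is nonlinear and far more sophisticated, and the 600-nit display and scene values are hypothetical; the point is only how one whole-video number drags a dim scene down while per-scene numbers leave it alone.

```python
from typing import Dict

# Toy model, not Dolby's algorithm: each scene is reduced to its brightest
# highlight in nits, and "tone mapping" is a plain linear gain.
DISPLAY_PEAK_NITS = 600.0  # hypothetical TV


def gain_for(content_peak: float, display_peak: float = DISPLAY_PEAK_NITS) -> float:
    """Linear gain that squeezes content_peak into the display's range."""
    return min(1.0, display_peak / content_peak)


def static_mapping(scene_peaks: Dict[str, float]) -> Dict[str, float]:
    # One gain for the whole video, derived from its single brightest scene
    # (loosely analogous to static, whole-stream metadata).
    gain = gain_for(max(scene_peaks.values()))
    return {name: peak * gain for name, peak in scene_peaks.items()}


def dynamic_mapping(scene_peaks: Dict[str, float]) -> Dict[str, float]:
    # A fresh gain for each scene (the idea behind dynamic metadata).
    return {name: peak * gain_for(peak) for name, peak in scene_peaks.items()}


scenes = {"dark_scene": 200.0, "bright_scene": 4000.0}
print("static: ", static_mapping(scenes))
print("dynamic:", dynamic_mapping(scenes))
```

With one gain for everything, the dark scene's highlights drop to about 30 nits; with per-scene gains they stay at 200, which the display could show all along.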

If you have Apple TV+ or Disney+, as well as a good OLED TV, like one of the more recent LG models, then you're likely already familiar with what Dolby Vision can and does look like, and with the huge difference between proper HDR and old-fashioned SDR.

Why this, why now?

So, if Dolby Vision is so great, why doesn't everything use it?

Well, first, you have to license it from Dolby, which costs money. That's why some companies still use HDR10 or the newer, better, freely licensable HDR10+.

Second, you have to compute it, which used to require special cameras, often dual exposures, and a beefy editing rig to put it — and output it — all together.

Now, as of this week, Apple is doing it on a phone. On. A. Phone.

And in 10-bit. For context, most cameras record in 8-bit. I record my videos in 10-bit. RAW is typically 12-bit or higher. More bits mean more data in the video, so if you need to fix white balance, exposure, or saturation in post, you have much more to work with.

That's obviously a ton more data to process, but the iPhone 12 can handle it, thanks to the A14 Bionic system-on-a-chip, or SoC, which pulls all that data off the camera sensor, crunches it, adds the Dolby Vision metadata, and saves it all in real time. In. Real. Time.

It's the first time Apple has had compute engines capable of doing it. And it's legit mind-blowing.
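For a rough sense of the numbers, this sketch counts the code values per color channel at each bit depth and estimates the raw bandwidth of 4K video before compression. It assumes three full samples per pixel (4:4:4) and ignores how the sensor is actually read out and how the iPhone compresses the result, so treat the output as an upper-bound illustration, not a spec.

```python
def levels(bits: int) -> int:
    """Distinct code values per color channel at a given bit depth."""
    return 2 ** bits


def uncompressed_gbps(width: int, height: int, bits: int, fps: int,
                      samples_per_pixel: float = 3.0) -> float:
    """Raw video bandwidth in gigabits per second, before any compression.

    samples_per_pixel=3.0 assumes full-resolution R, G, B (4:4:4);
    real capture pipelines subsample and compress heavily.
    """
    bits_per_frame = width * height * samples_per_pixel * bits
    return bits_per_frame * fps / 1e9


for bits in (8, 10, 12):
    per_channel = levels(bits)
    print(f"{bits}-bit: {per_channel} levels per channel, {per_channel ** 3:,} colors")

for fps in (30, 60):
    for bits in (8, 10):
        rate = uncompressed_gbps(3840, 2160, bits, fps)
        print(f"4K{fps} {bits}-bit: {rate:.2f} Gbit/s uncompressed")
```

Going from 8-bit to 10-bit gives four times as many steps per channel for grading, while the raw data only grows by 25%; doubling the frame rate doubles it again.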

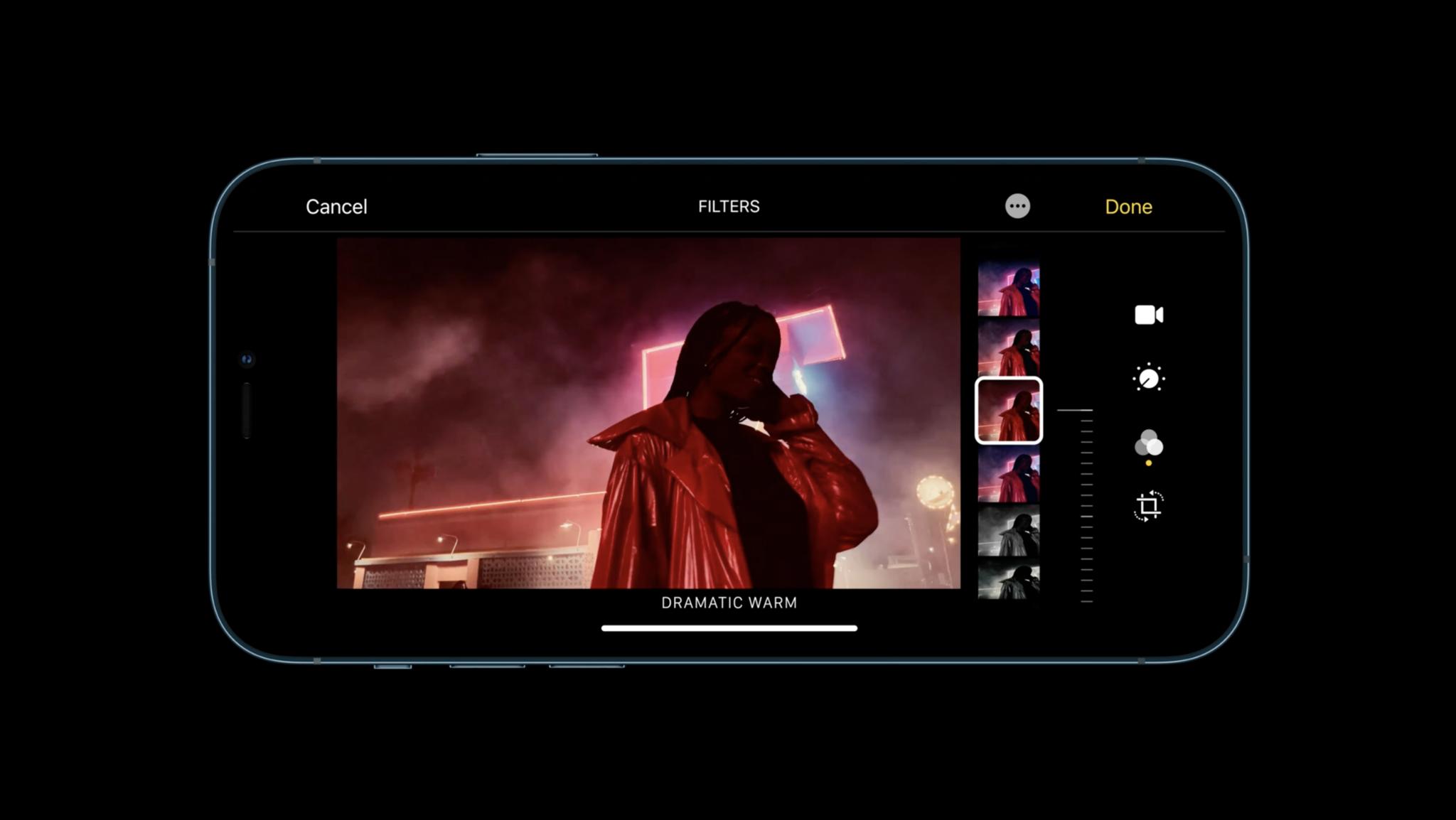

Even more so because Apple is also letting you edit the footage, even apply filters, and then re-computing the Dolby Vision data on the fly. On. The— Fine, I'll stop that now.

iPhone 12 vs. iPhone 12 Pro

Alas, Apple hasn't implemented Dolby Vision support equally across the lineup.

Probably because of the difference in memory between the iPhone 12 and the iPhone 12 Pro models, the former is limited to Dolby Vision 4K at 30fps while the latter can go to Dolby Vision 4K at 60fps.

That means you can get either incredibly silky-smooth video or retime it later when editing to get even silkier 50% or 40% slow motion. (I go for 40% because I edit in 24fps like Hollywood and nature intended. You edit you.)
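The retiming math is just the ratio of the timeline frame rate to the capture frame rate, assuming every captured frame is played back exactly once. A minimal sketch:

```python
def retime_speed(capture_fps: float, timeline_fps: float) -> float:
    """Playback speed when each captured frame is shown once on the timeline."""
    return timeline_fps / capture_fps


# 60fps footage on a 30fps or 24fps timeline, and 30fps footage on 24fps.
for capture, timeline in ((60, 30), (60, 24), (30, 24)):
    speed = retime_speed(capture, timeline)
    print(f"{capture}fps on a {timeline}fps timeline: {speed:.0%} speed")
```

That's why 30fps footage only gets you to 80% on a 24fps timeline, while 60fps gets you the 50% and 40% slow motion.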

HDR in an SDR world

There are some drawbacks, though. Namely, this is still cutting-edge, maybe even bleeding-edge, stuff.

Once you shoot your gorgeous Dolby Vision video, you may be limited to watching it on an iPhone or Android phone with an HDR display. If you have a good HDR television, maybe you can beam it there too.

But a lot of services and devices still have problems rendering HDR content to non-HDR displays. For example, if you upload an HDR video to YouTube, and someone tries to watch it in SDR, the results can be… really bad.
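One reason conversions go wrong is how highlights above SDR's range are handled. This sketch compares naive clipping with the extended Reinhard operator, a generic textbook tone-mapping curve. It assumes linear-light values in nits, a 100-nit SDR white, and a 1,000-nit content peak; real HDR video is PQ-encoded and has to be decoded first, and this is not how YouTube or any specific service actually converts HDR.

```python
SDR_WHITE_NITS = 100.0  # assumption: SDR reference white


def clip_to_sdr(nits: float) -> float:
    """Naive conversion: anything brighter than SDR white is simply clipped."""
    return min(nits / SDR_WHITE_NITS, 1.0)


def reinhard_to_sdr(nits: float, max_nits: float = 1000.0) -> float:
    """Extended Reinhard: compresses highlights so max_nits lands on 1.0."""
    x = nits / SDR_WHITE_NITS
    white = max_nits / SDR_WHITE_NITS
    return x * (1 + x / white ** 2) / (1 + x)


for nits in (50, 100, 400, 1000):
    print(f"{nits:>5} nits -> clip {clip_to_sdr(nits):.2f}, "
          f"reinhard {reinhard_to_sdr(nits):.2f}")
```

Clipping maps 100, 400, and 1,000 nits to the same white, so highlight detail vanishes; the curve keeps them distinct at the cost of dimming midtones, which is the kind of trade-off a converter has to get right.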

Getting out to push

It's my hope, though, that this will be yet another case of Apple getting out and pushing: using their massive scale to force the state of the art forward. Like going all-in on USB with the iMac, putting multitouch on the iPhone, or making AirPods.

Hopefully, it'll result in everyone, especially YouTube, figuring out how to handle high dynamic range as easily as they already handle high resolution.

My hope is that, by putting Dolby Vision cameras into hundreds of thousands of hands, Apple will make HDR just work. For all of us.

And then I'll be standing there smiling next to Dolby.

Rene Ritchie is one of the most respected Apple analysts in the business, reaching a combined audience of over 40 million readers a month. His YouTube channel, Vector, has over 90 thousand subscribers and 14 million views and his podcasts, including Debug, have been downloaded over 20 million times. He also regularly co-hosts MacBreak Weekly for the TWiT network and co-hosted CES Live! and Talk Mobile. Based in Montreal, Rene is a former director of product marketing, web developer, and graphic designer. He's authored several books and appeared on numerous television and radio segments to discuss Apple and the technology industry. When not working, he likes to cook, grapple, and spend time with his friends and family.